Many people assume that CUDA is mandatory for AI.

So a common question arises:

“If CUDA is essential for AI, how can Apple M-series chips run AI without CUDA at all?”

In practice, you may have noticed that:

- AI inference runs on a MacBook

- Some models perform surprisingly well

- Yet there is no CUDA anywhere in sight

This article explains why Apple doesn’t need CUDA by looking at its design goals and system architecture.

Short Answer (One Sentence)

Apple does not use CUDA because it chose a fully integrated, end-to-end AI architecture optimized for on-device AI, not large-scale training.

It’s not that Apple avoids acceleration.

It accelerates AI differently.

What CUDA Is Designed For

CUDA is NVIDIA’s proprietary GPU computing platform and programming model, and it runs only on NVIDIA GPUs.

In AI, it is mainly used to:

- Accelerate massive matrix computations

- Enable large-scale AI training

- Power data centers and workstations

📌 Key point:

CUDA is a general-purpose platform, but in AI its ecosystem is strongest at large-scale model training.

Apple’s AI Goals Are Fundamentally Different

From the beginning, Apple optimized M-series chips for:

- On-device AI

- Real-time inference

- Low power consumption

- Tight integration across devices

👉 Apple focuses less on “How do we train trillion-parameter models?”

👉 And more on “How do we run AI smoothly on personal devices?”

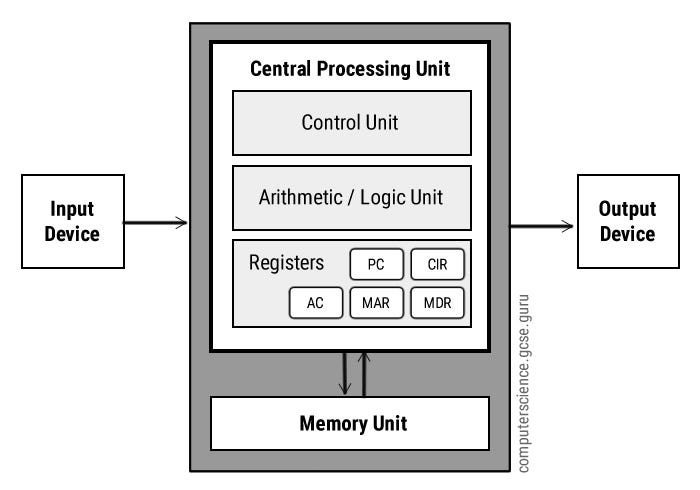

Apple M-Series: More Than Just CPU + GPU

Unlike a traditional PC, which pairs a CPU with a discrete GPU that has its own memory, M-series chips integrate:

- CPU

- GPU

- Neural Processing Unit (Neural Engine)

- Memory controller

All on a single system-on-a-chip (SoC).

Neural Engine: Purpose-Built for AI Inference

The Neural Engine (NPU) is Apple’s dedicated AI accelerator:

- Designed specifically for neural networks

- Extremely power-efficient

- Very fast for supported operations

📌 Important distinction:

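The Neural Engine is not a general-purpose compute unit. It is optimized for neural-network inference, and apps reach it through frameworks such as Core ML rather than programming it directly.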

Why Apple Doesn’t Need CUDA

Instead of CUDA, Apple relies on three tightly integrated components that work together.

① Metal: Apple’s GPU Compute API

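Metal is Apple’s low-level graphics and GPU compute API:

- For compute work, it fills the role CUDA plays on NVIDIA hardware: parallel kernels are written in the Metal Shading Language

- Runs only on Apple platforms, just as CUDA runs only on NVIDIA GPUs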

- Deeply integrated with macOS and iOS

👉 For GPU parallel computing, Apple uses Metal, not CUDA.
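Most developers use Metal indirectly, through a framework. As one example, PyTorch provides an `mps` device backed by Metal Performance Shaders, so the same code can run on an NVIDIA GPU via CUDA, on an Apple GPU via Metal, or on the CPU. The sketch below is illustrative: `pick_device` is a hypothetical helper, it assumes a recent PyTorch release, and it falls back to the CPU if PyTorch is not installed.

```python
def pick_device() -> str:
    """Return "cuda", "mps", or "cpu" (hypothetical helper for illustration)."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch not installed: nothing to accelerate

    if torch.cuda.is_available():  # NVIDIA GPU through CUDA
        return "cuda"
    # Older PyTorch releases have no torch.backends.mps, hence getattr.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():  # Apple GPU through Metal
        return "mps"
    return "cpu"


if __name__ == "__main__":
    device = pick_device()
    print("Selected device:", device)
    try:
        import torch

        x = torch.randn(256, 256, device=device)
        y = x @ x  # the matrix multiply runs on the selected backend
        print("Result shape:", tuple(y.shape))
    except ImportError:
        pass
```

Only the device string differs between backends; the tensor code itself is unchanged.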

② Core ML: Automatic Model Optimization

Core ML acts as a translation and optimization layer:

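- Converts models trained in other frameworks (for example, PyTorch) into Apple’s optimized format, using Apple’s Core ML Tools

- Automatically decides where execution runs:

  - CPU

  - GPU

  - Neural Engine

📌 By default, developers do not need to manage hardware placement manually, although they can restrict which processors Core ML may use.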
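A minimal conversion sketch, assuming `torch` and Apple’s `coremltools` package are installed. `TinyNet` and `convert_tiny_net` are hypothetical names used only for illustration. The `compute_units` argument is where a developer expresses a preference: `ct.ComputeUnit.ALL` leaves placement to Core ML, while values such as `CPU_ONLY`, `CPU_AND_GPU`, or `CPU_AND_NE` restrict it.

```python
def convert_tiny_net():
    """Convert a toy PyTorch model to a Core ML program (hypothetical example).

    Imports live inside the function so this file can be imported even
    when torch and coremltools are not installed.
    """
    import torch
    import coremltools as ct

    class TinyNet(torch.nn.Module):
        """Hypothetical toy model: one linear layer followed by ReLU."""

        def __init__(self) -> None:
            super().__init__()
            self.fc = torch.nn.Linear(4, 2)

        def forward(self, x):
            return torch.relu(self.fc(x))

    model = TinyNet().eval()
    example = torch.rand(1, 4)
    # Core ML Tools converts traced TorchScript models, so trace first.
    traced = torch.jit.trace(model, example)

    return ct.convert(
        traced,
        inputs=[ct.TensorType(name="x", shape=example.shape)],
        convert_to="mlprogram",
        # ALL lets Core ML schedule work across CPU, GPU, and Neural Engine;
        # CPU_ONLY, CPU_AND_GPU, or CPU_AND_NE would restrict the choice.
        compute_units=ct.ComputeUnit.ALL,
    )
```

Calling `convert_tiny_net().save("TinyNet.mlpackage")` writes a model package (the filename is arbitrary) that an app can then load with Core ML.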

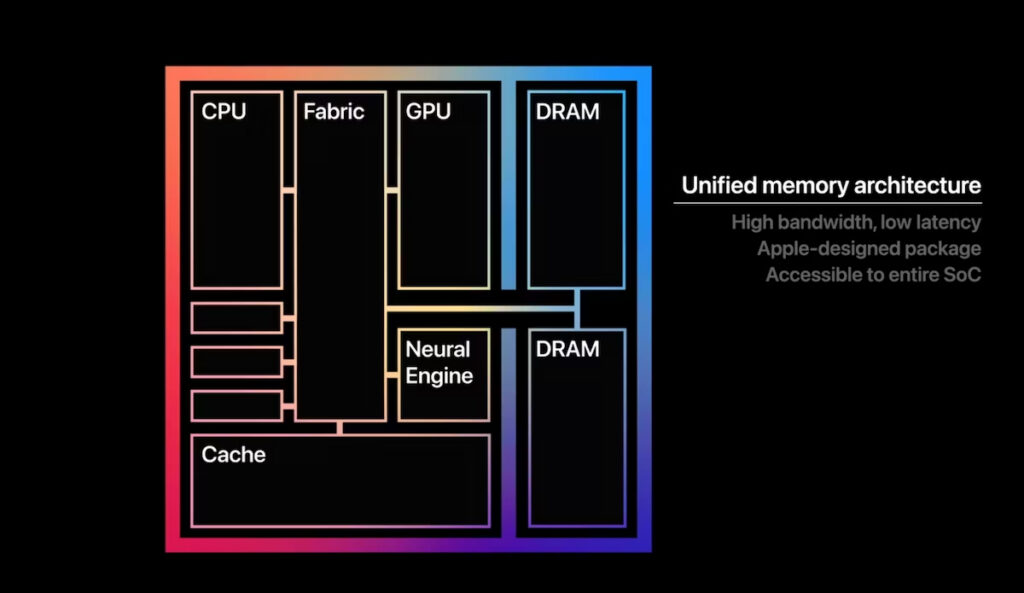

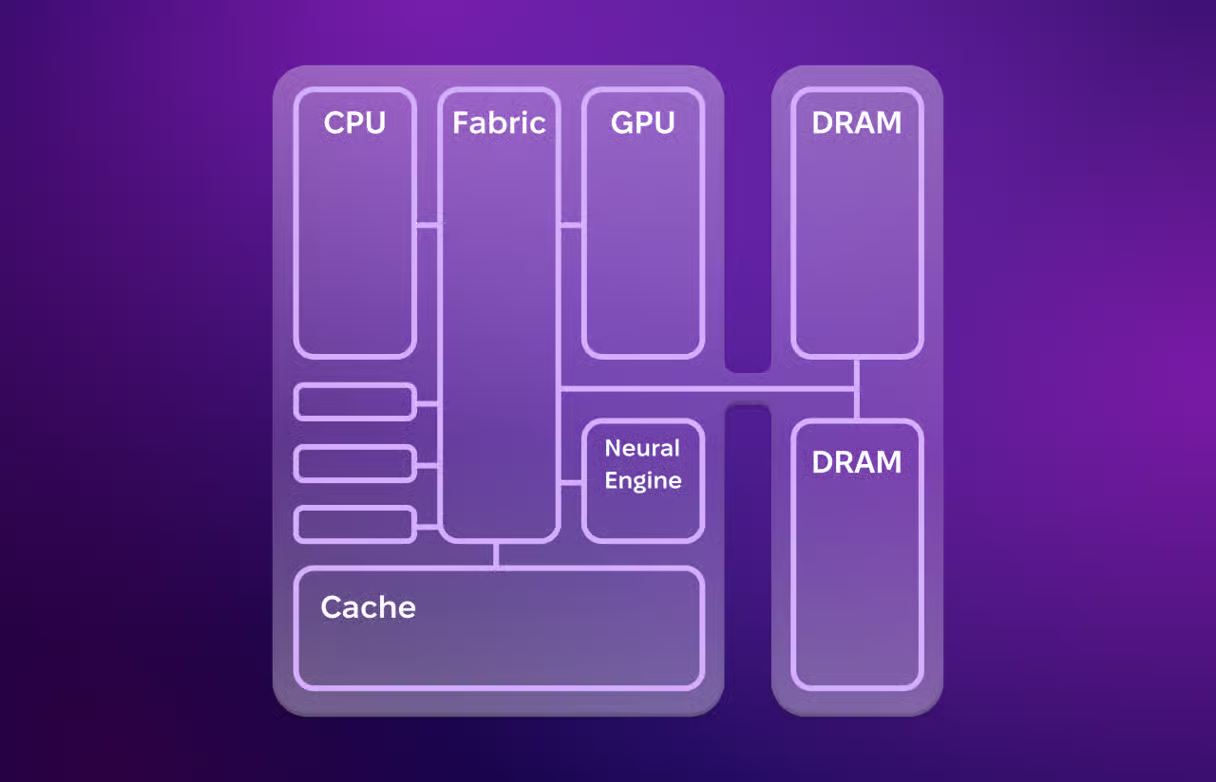

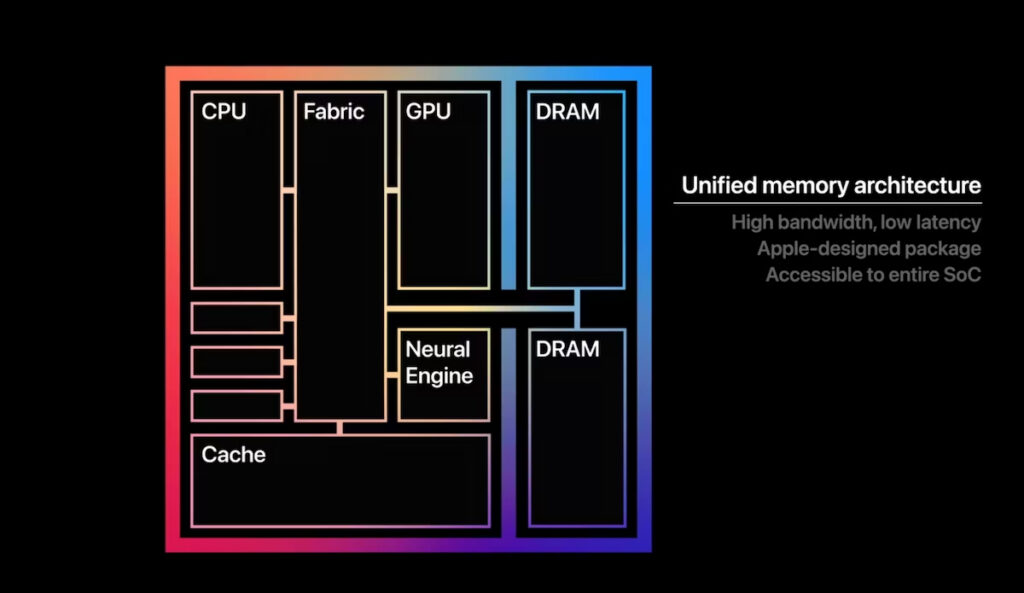

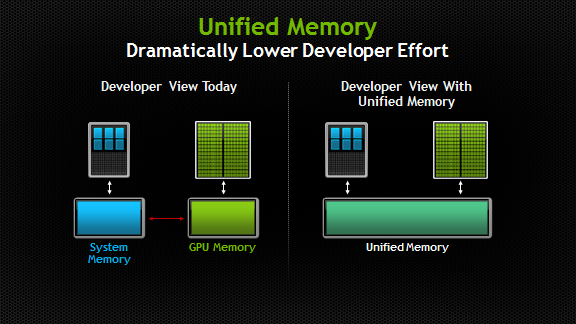

③ Unified Memory Architecture: A Major Advantage

Apple uses Unified Memory Architecture (UMA):

- CPU, GPU, and NPU share the same memory pool

- No copying data between system RAM and separate GPU memory (VRAM)

- Lower latency and lower power consumption

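👉 This is especially beneficial for AI inference: model weights are loaded into memory once and every processor can read them, and the GPU can use a large share of system memory rather than a fixed pool of VRAM.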
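A back-of-the-envelope sketch shows the kind of copy that unified memory removes. On a PC with a discrete GPU, model weights must be copied from system RAM to VRAM over PCIe before the GPU can use them. The figures are assumptions for illustration: a 7-billion-parameter model stored as 16-bit floats (2 bytes per parameter), and about 32 GB/s, roughly the theoretical peak of a PCIe 4.0 x16 link (real transfers are usually slower).

```python
def weights_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Size of a model's weights; 2 bytes per parameter corresponds to FP16."""
    return num_params * bytes_per_param


def copy_seconds(num_bytes: int, bandwidth_gb_per_s: float) -> float:
    """Idealized time to move num_bytes over a link (1 GB = 1e9 bytes)."""
    return num_bytes / (bandwidth_gb_per_s * 1e9)


if __name__ == "__main__":
    size = weights_bytes(7_000_000_000)  # assumed 7B-parameter FP16 model
    print(f"Weights: {size / 1e9:.0f} GB")
    # Assumed ~32 GB/s, roughly the PCIe 4.0 x16 theoretical peak.
    print(f"Host-to-GPU copy: {copy_seconds(size, 32):.2f} s")
```

This prints `Weights: 14 GB` and `Host-to-GPU copy: 0.44 s`. The full weight copy happens once, at load time, while smaller transfers of inputs and outputs repeat on every request; with unified memory, the CPU, GPU, and Neural Engine share the same physical memory, so these copies can be avoided.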

Can Apple M-Series Chips Train Large Models?

Realistically:

They are not designed for that purpose.

M-Series Chips Are Well-Suited For:

- AI inference

- Fine-tuning smaller models

- Edge AI

- Personal AI assistants

They Are Not Ideal For:

- Training very large models

- Multi-GPU distributed training

- Data-center-scale workloads

👉 That remains the primary domain of NVIDIA GPUs with CUDA.
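A rough memory estimate shows why training is the harder case. A widely used rule of thumb is about 2 bytes per parameter to hold FP16 weights for inference, versus about 16 bytes per parameter for mixed-precision training with the Adam optimizer: FP16 weights and gradients (2 + 2 bytes) plus FP32 master weights, momentum, and variance (4 + 4 + 4 bytes). The sketch ignores activations and framework overhead, which add more, and the model sizes are illustrative.

```python
# Approximate bytes per parameter (rule of thumb; activations not included).
INFERENCE_FP16 = 2  # FP16 weights only
TRAINING_ADAM_MIXED = 2 + 2 + 4 + 4 + 4  # FP16 weights + grads, FP32 master copy + Adam moments


def memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Approximate memory footprint in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for billions in (7, 70):  # illustrative model sizes, in billions of parameters
        n = billions * 1e9
        print(
            f"{billions}B params: inference ~{memory_gb(n, INFERENCE_FP16):.0f} GB, "
            f"training ~{memory_gb(n, TRAINING_ADAM_MIXED):.0f} GB"
        )
```

For a 7B model this gives roughly 14 GB for inference but 112 GB for training, before activations. At 70B the training figure reaches about 1,120 GB, far more than a single GPU or Mac provides, so such runs are spread across many GPUs.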

Quick Comparison Table

| Aspect | Apple M-Series | NVIDIA + CUDA |
|---|---|---|
| Primary focus | On-device AI | Large-scale training |
| Power efficiency | Extremely high | Lower |
| Memory model | Unified memory | Discrete VRAM |
| GPU API | Metal | CUDA |
| AI acceleration | Neural Engine | Tensor Cores |
| Best use case | Inference, personal AI | Training, data centers |

Is Not Using CUDA a Disadvantage for Apple?

Not for the workloads Apple targets.

It is a strategic choice, not a technical limitation.

- NVIDIA prioritizes scale and training performance

- Apple prioritizes efficiency and user experience

They are solving different problems.

Final Summary

Apple M-series chips do not use CUDA because Apple built a vertically integrated AI stack, combining Metal, Core ML, the Neural Engine, and unified memory, to optimize on-device AI.

One Line to Remember

CUDA solves “how to compute more,”

Apple solves “how to compute better on your device.”