As local Large Language Models (LLMs) become more popular, many people ask:

“Is my Apple M-series Mac actually suitable for running local LLMs?”

The answer is not simply yes or no.

It depends on what you want to do, how large the model is, and how you plan to use it.

This article evaluates Apple M-series chips from three practical angles:

- Hardware architecture

- Memory constraints

- Real-world usage scenarios

Short Answer (Key Takeaway)

Apple M-series chips are well suited for local LLM inference and lightweight usage, but they are not designed for large-scale model training or high-concurrency deployment.

If you treat an M-series Mac as:

- A personal AI assistant

- A development or testing environment

- A lightweight RAG or inference platform

👉 It can be an excellent experience.

What Do We Mean by “Local LLM”?

In practice, a local LLM usually means:

- The model runs entirely on your own machine

- No reliance on cloud APIs

- Common model sizes:

  - 7B

  - 8B

  - 13B (quantized)

📌 The real question is not “What’s the biggest model I can load?”

📌 It’s “Can it run smoothly, reliably, and for long periods?”
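To turn "smoothly and reliably" into numbers, it helps to measure two things on your own machine: time to first token and decode speed in tokens per second. The sketch below is tool-agnostic and simply times any Python iterable of tokens. `measure_stream` and `fake_stream` are hypothetical helpers for illustration; in practice you would pass in the streaming iterator from whichever runtime you use.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class StreamStats:
    tokens: int
    time_to_first_token_s: float  # includes prompt processing
    decode_tokens_per_s: float    # steady-state generation speed


def measure_stream(stream: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> StreamStats:
    """Consume a token stream and report first-token latency and decode throughput."""
    start = clock()
    first = None
    count = 0
    for _token in stream:
        count += 1
        if first is None:
            first = clock()  # timestamp when the first token arrives
    end = clock()
    if first is None:  # empty stream: nothing to measure
        return StreamStats(0, float("nan"), 0.0)
    decode_time = end - first
    # All tokens after the first were produced during decode_time.
    rate = (count - 1) / decode_time if count > 1 and decode_time > 0 else 0.0
    return StreamStats(count, first - start, rate)


def fake_stream(n: int = 20, delay_s: float = 0.01) -> Iterator[str]:
    """Hypothetical stand-in for a model's streaming output."""
    for i in range(n):
        time.sleep(delay_s)
        yield f"tok{i}"


if __name__ == "__main__":
    print(measure_stream(fake_stream()))
```

The clock is injectable so the function can be tested deterministically. Repeating the measurement over a long session is one way to check whether throughput stays stable once the machine warms up.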

Three Major Advantages of Apple M-Series for Local LLMs

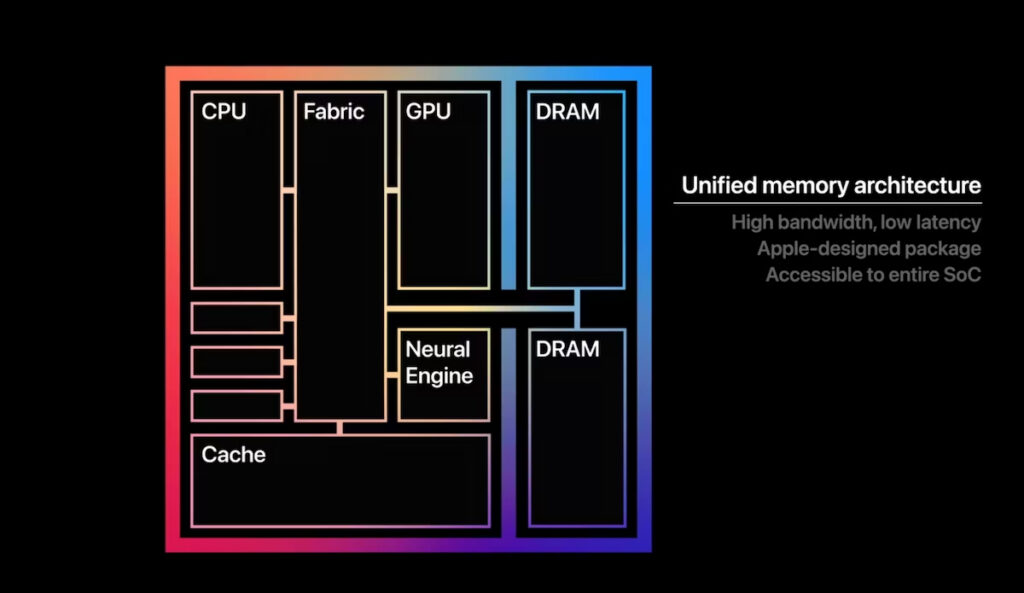

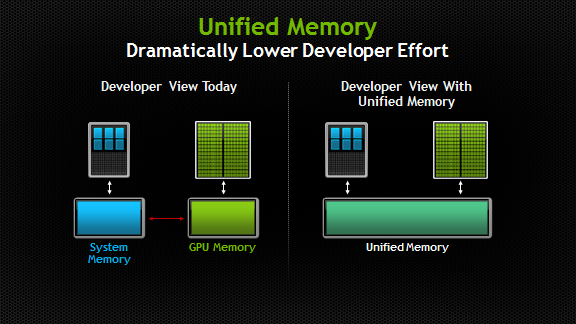

① Unified Memory Architecture: A Big Win

Apple M-series chips use Unified Memory Architecture (UMA):

- CPU, GPU, and Neural Engine share the same memory pool

- No RAM ↔ VRAM copying

- Lower latency and simpler memory management

👉 For local LLMs, being able to fit the model in memory matters more than raw GPU core count.
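Fitting the model is not only about the weights. During generation, the runtime also keeps a key/value (KV) cache that grows linearly with context length, and under UMA it draws from the same pool as the weights, macOS, and your other apps. A rough estimate is sketched below; the layer and head shapes are assumed values resembling a 7B-class Llama-style model, not numbers read from a specific checkpoint, and real runtimes add their own overhead.

```python
GIB = 1024 ** 3


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   context_tokens: int, bytes_per_elem: int = 2) -> int:
    """Approximate KV-cache size: one K and one V vector per layer, KV head, and token."""
    return 2 * n_layers * n_kv_heads * head_dim * context_tokens * bytes_per_elem


if __name__ == "__main__":
    # Assumed 7B-class shapes with full multi-head attention and an fp16 cache.
    mha = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128, context_tokens=4096)
    # Same assumed shapes, but with grouped-query attention (8 KV heads).
    gqa = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128, context_tokens=4096)
    print(f"MHA: {mha / GIB:.2f} GiB, GQA: {gqa / GIB:.2f} GiB")  # MHA: 2.00 GiB, GQA: 0.50 GiB
```

Doubling the context doubles this figure, which is why a model that "fits" at short prompts can run out of room in long document sessions.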

② Exceptional Power Efficiency

One of the strongest advantages of Apple M-series chips is efficiency:

- Low power consumption

- Minimal heat generation

- Sustained performance without aggressive throttling

📌 In real usage:

- A MacBook Pro can run local LLM inference continuously

- Fans remain quiet

- Battery drain is predictable

👉 This is an experience desktop GPUs simply do not offer.

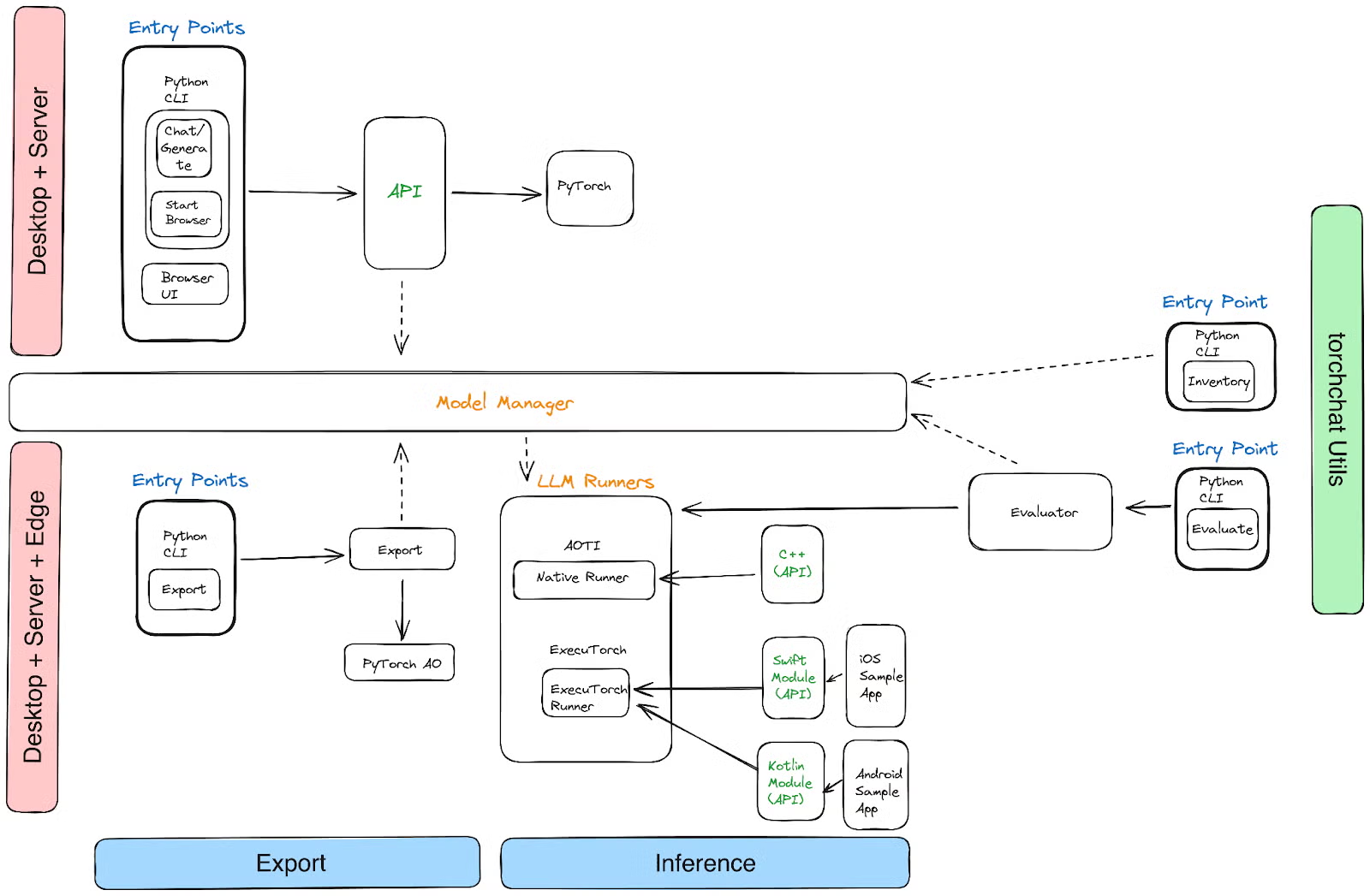

③ A Mature Inference Ecosystem

On macOS, local LLM tooling is already quite mature:

- llama.cpp with the Metal backend

- Apple’s MLX framework

- Ollama with native macOS support

👉 Running inference on Apple M-series is no longer a technical obstacle.
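As a minimal sketch of what this looks like in practice, the snippet below sends a prompt to a locally running Ollama server through its HTTP API (`POST /api/generate` on the default port 11434) using only the Python standard library. It assumes `ollama serve` is running and that a model named `llama3` has been pulled; the model name and prompt are examples only. llama.cpp and MLX expose their own interfaces, which are not shown here.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming /api/generate request for a local Ollama server."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}, method="POST"
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text from the JSON "response" field."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]


if __name__ == "__main__":
    try:
        # "llama3" is an example; pull it first with `ollama pull llama3`.
        print(generate("llama3", "Explain unified memory in one sentence."))
    except OSError as exc:  # URLError is an OSError subclass; raised if no server is listening
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
```

Setting `"stream": False` keeps the example short by returning one JSON object; interactive tools usually stream tokens instead.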

Where Are the Real Limitations?

This is the most important section.

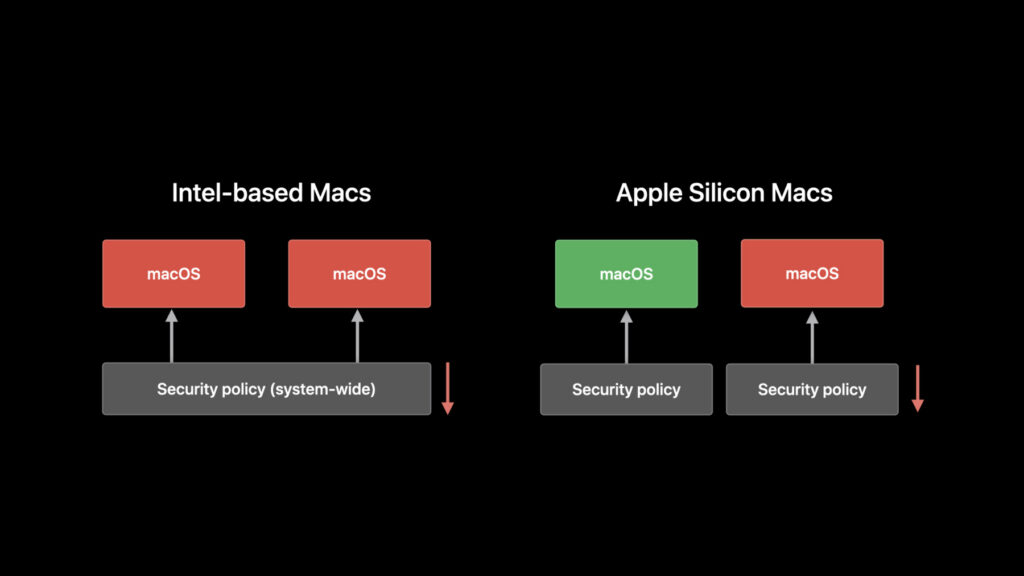

❌ 1️⃣ GPU Compute Is Not Designed for Large-Scale Training

Apple GPUs prioritize:

- Energy efficiency

- Integrated system performance

They are not optimized for:

- Very large models

- Long training runs

- Multi-GPU scaling

👉 Training large LLMs is outside the intended design scope.

❌ 2️⃣ Neural Engine Offers Limited Benefits for LLMs

Apple’s Neural Engine (NPU):

- Excels at vision and speech models

- Is highly efficient for specific workloads

However, for general Transformer-based LLMs:

- Support is limited

- Most execution still happens on the GPU

👉 LLMs currently gain little direct benefit from the NPU.

❌ 3️⃣ Memory Is Not Upgradeable

Apple M-series systems have:

- Soldered memory

- No post-purchase upgrades

For LLM usage:

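- 16 GB → 7B / 8B models at 4-bit quantization, with little headroom

- 32 GB → comfortable for 7B / 8B models; 13B (quantized) also fits

- 64 GB / 96 GB → room for 13B at higher precision, longer contexts, or larger quantized models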

👉 Choosing the wrong memory size is the most expensive mistake.
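Before choosing a memory size, a back-of-envelope estimate helps: weight memory is roughly parameter count × bits per weight ÷ 8. The sketch below assumes about 4.5 bits per weight for common 4-bit quantization formats (which also store per-block scales), a fixed 1 GiB allowance for runtime overhead, and that roughly 70% of RAM is usable for the model once macOS and other apps take their share. All three numbers are assumptions to adjust, and the KV cache for long contexts comes on top.

```python
GIB = 1024 ** 3


def weight_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight footprint in GiB: parameters x bits / 8 bytes."""
    return params_billion * 1e9 * bits_per_weight / 8 / GIB


def fits(params_billion: float, bits_per_weight: float, ram_gib: float,
         usable_fraction: float = 0.7, overhead_gib: float = 1.0) -> bool:
    """Rule-of-thumb check; usable_fraction and overhead_gib are assumptions, not measurements."""
    needed = weight_gib(params_billion, bits_per_weight) + overhead_gib
    return needed <= ram_gib * usable_fraction


if __name__ == "__main__":
    for params in (7, 8, 13):
        for bits in (4.5, 8, 16):  # ~4-bit quantized, 8-bit, fp16
            sizes = [ram for ram in (16, 32, 64, 96) if fits(params, bits, ram)]
            print(f"{params}B @ {bits} bits: ~{weight_gib(params, bits):.1f} GiB weights, fits in {sizes} GB")
```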

Apple M-Series vs NVIDIA GPU (Local LLM Perspective)

| Aspect | Apple M-Series | NVIDIA GPU |
|---|---|---|
| Primary role | Inference, personal use | Training, large models |
| Memory model | Unified (major advantage) | Discrete VRAM |
| Power efficiency | Extremely high | Low |
| CUDA support | ❌ | ✅ |
| LLM training | Not suitable | Excellent |
| Local usability | Very user-friendly | Engineering-focused |

When Apple M-Series Is a Great Choice

✔️ Apple M-series is ideal if you:

- Want a local conversational LLM

- Build RAG or document-QA systems

- Value silence, low power, and mobility

- Use AI as a productivity tool, not infrastructure

When Apple M-Series Is the Wrong Tool

❌ It is not ideal if you:

- Train large models

- Run multi-user inference services

- Chase maximum tokens/sec

- Plan to scale compute capacity over time

👉 In those cases, NVIDIA GPUs with CUDA are the correct solution.

One Sentence to Remember

Apple M-series chips are not “compute monsters”—they are “local AI experience machines.”

Final Conclusion

Apple M-series chips are very well suited for local LLM inference and applications, but they should not be treated as a primary platform for AI training or server workloads.

If your goals include:

- Personal AI assistants

- Local knowledge bases

- Low-noise, low-power AI tools

👉 Apple M-series hardware delivers an excellent user experience.