When teams adopt AI, they often make one critical mistake:

“We’re building AI—let’s design the system like a training cluster.”

The result is predictable:

- Overpowered GPUs running at low utilization

- VRAM exhaustion when context grows

- Unstable latency and poor user experience

- High cost with little real benefit

The problem isn’t the technology—it’s the architecture mindset.

👉 Inference-first AI systems must be designed very differently from training systems.

One-Sentence Takeaway

An inference-first AI architecture is not about maximizing compute—it’s about stability, low latency, memory efficiency, and operability.

A Critical Premise: You’re Not “Building” the Model

In inference-first scenarios:

- The model is already trained

- No backpropagation is needed

- Peak FLOPS are not the priority

What you’re really doing is:

Turning a trained model into a reliable, always-on service.

Five Core Principles of Inference-First Architecture

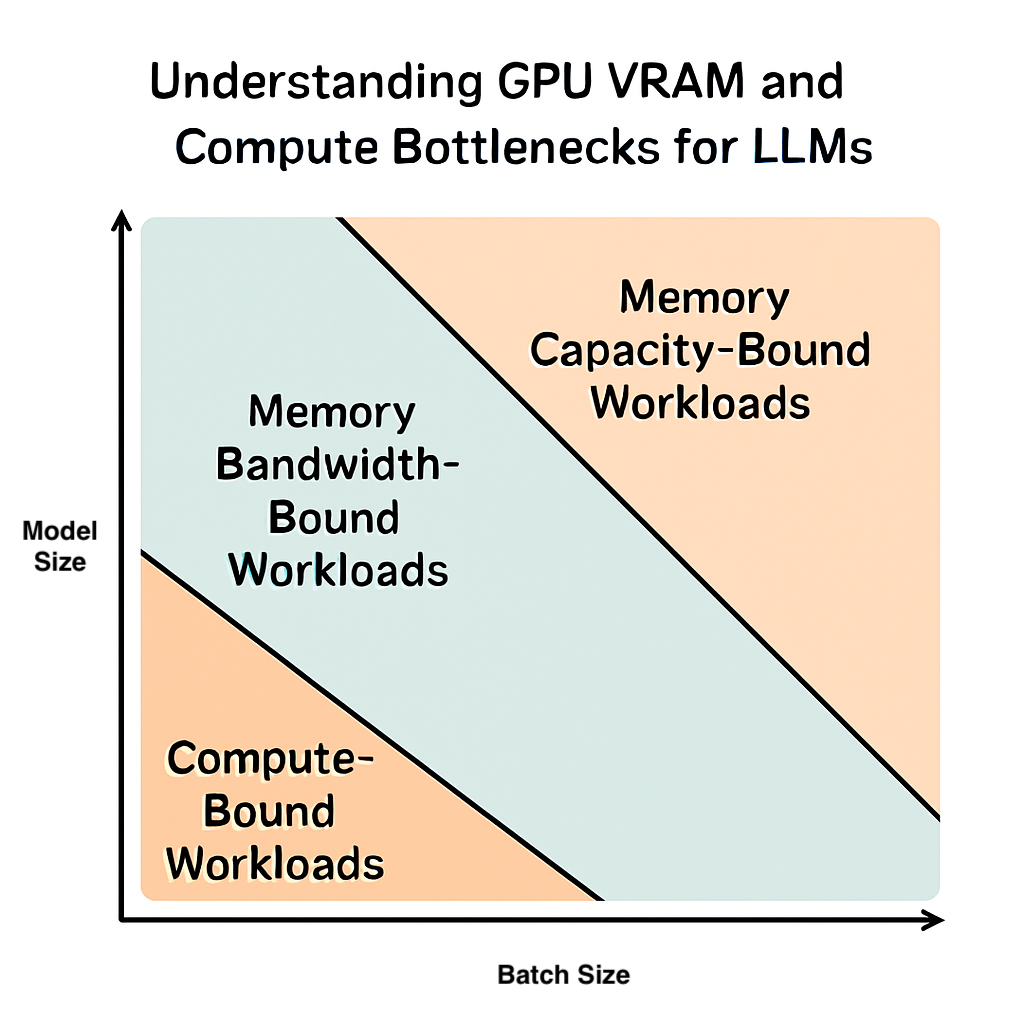

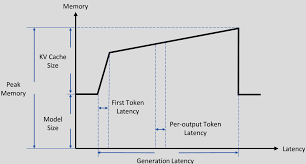

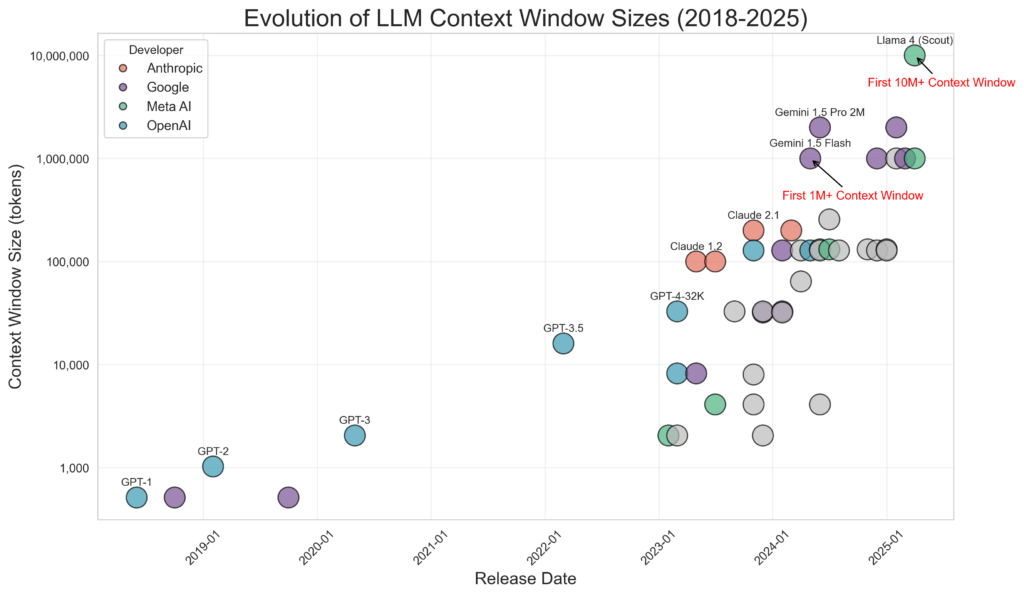

Principle 1: Memory Comes Before Compute

The first question in inference design is always:

Can the model fully fit in memory—consistently?

Design considerations

- VRAM or unified memory capacity

- Model weight size, plus the KV cache, which grows with context length and the number of concurrent requests

- Reserved headroom for runtime buffers

📌 Insufficient compute makes inference slow; insufficient memory makes it impossible.
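The "does it fit?" question can be answered with back-of-the-envelope arithmetic before buying hardware. The sketch below is a minimal estimate, assuming a standard transformer KV cache (one key and one value vector per layer, KV head, and token) and a flat headroom fraction for runtime buffers. `ModelSpec`, `fits_in_memory`, and the example shape are hypothetical illustrations, not values from any particular model card; substitute your model's real config.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass
class ModelSpec:
    n_params: float            # total parameter count
    n_layers: int
    n_kv_heads: int            # KV heads (fewer than query heads with grouped-query attention)
    head_dim: int
    bytes_per_weight: float    # 2.0 for FP16/BF16; about 0.5 for 4-bit quantized weights
    bytes_per_kv: float = 2.0  # assumes an FP16/BF16 KV cache


def weights_bytes(spec: ModelSpec) -> float:
    return spec.n_params * spec.bytes_per_weight


def kv_cache_bytes(spec: ModelSpec, context_tokens: int, concurrent_seqs: int) -> float:
    # Factor 2: one key and one value vector per layer, KV head, and token.
    per_token = 2 * spec.n_layers * spec.n_kv_heads * spec.head_dim * spec.bytes_per_kv
    return per_token * context_tokens * concurrent_seqs


def fits_in_memory(spec: ModelSpec, memory_bytes: float, context_tokens: int,
                   concurrent_seqs: int, headroom_fraction: float = 0.10) -> bool:
    # Hold back a fraction of memory for runtime buffers, activations, and fragmentation.
    usable = memory_bytes * (1 - headroom_fraction)
    needed = weights_bytes(spec) + kv_cache_bytes(spec, context_tokens, concurrent_seqs)
    return needed <= usable


# Hypothetical shape of an 8B-parameter model with grouped-query attention and FP16 weights.
EXAMPLE_8B = ModelSpec(n_params=8e9, n_layers=32, n_kv_heads=8, head_dim=128, bytes_per_weight=2.0)

if __name__ == "__main__":
    for seqs in (1, 4, 8):
        ok = fits_in_memory(EXAMPLE_8B, 24 * GIB, context_tokens=8192, concurrent_seqs=seqs)
        print(f"8k context x {seqs} sequences on 24 GiB: {'fits' if ok else 'does not fit'}")
```

With these assumed numbers the weights take about 14.9 GiB and each 8k-token sequence adds 1 GiB of KV cache, so one or four sequences fit on a 24 GiB card with 10% headroom, while eight do not. The weights are fixed; the KV cache is what moves the answer.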

Principle 2: Latency Stability Beats Peak Speed

Inference is not a benchmark contest.

What matters in production:

- Consistent response times

- P95 / P99 latency

- Predictable user experience

📌 A system that averages 50 ms but occasionally spikes to 500 ms feels worse than one that is consistently 120 ms.
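Averages hide exactly the spikes users notice, which is why tail percentiles are the metric to watch. The sketch below uses the nearest-rank percentile definition on synthetic latency samples (invented for illustration, not measurements) to compare a spiky service with a steady one.

```python
import math
import statistics


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples: list[float]) -> dict[str, float]:
    return {
        "mean_ms": statistics.mean(samples),
        "p95_ms": percentile(samples, 95),
        "p99_ms": percentile(samples, 99),
    }


# Synthetic data: 96 fast responses with 4 slow spikes, versus a uniformly slower service.
spiky = [30.0] * 96 + [500.0] * 4
steady = [120.0] * 100

if __name__ == "__main__":
    print("spiky ", summarize(spiky))
    print("steady", summarize(steady))
```

The spiky series has the lower mean (about 49 ms) but a P99 of 500 ms, while the steady series sits at 120 ms at every percentile, which is the trade-off described above.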

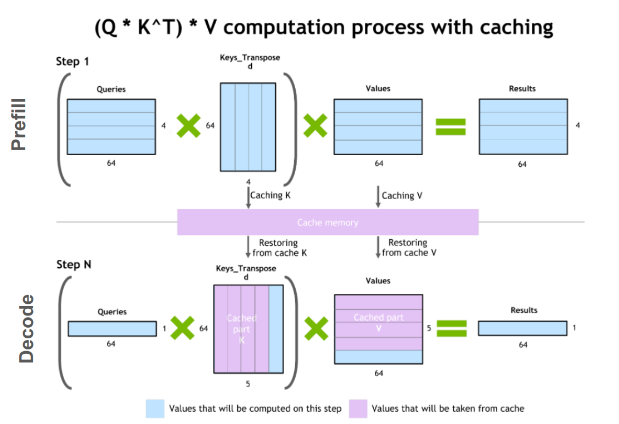

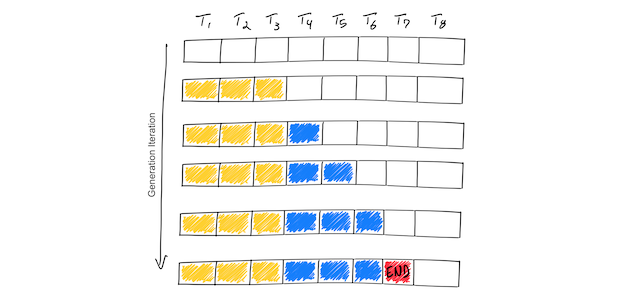

Principle 3: Context and KV Cache Must Be Actively Managed

A common mistake in inference systems:

Treating context length as “free.”

It isn’t.

Architectural decisions you must make:

- Maximum context length

- Truncation strategies

- Summarization of long histories

- Chunking and retrieval boundaries

👉 Unmanaged context growth will eventually exhaust VRAM.
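A truncation strategy can be as simple as keeping the system prompt and then the newest turns until a token budget runs out. The sketch below assumes chat-style messages as `{"role", "content"}` dicts and uses a whitespace word count as a stand-in tokenizer; a real service should count tokens with the model's own tokenizer. `trim_history` and `approx_tokens` are hypothetical names.

```python
from typing import Callable

Message = dict[str, str]  # {"role": ..., "content": ...}


def approx_tokens(text: str) -> int:
    # Placeholder only: counts whitespace-separated words, not real model tokens.
    return len(text.split())


def trim_history(messages: list[Message], max_tokens: int,
                 count_tokens: Callable[[str], int] = approx_tokens) -> list[Message]:
    """Keep the system prompt plus the most recent turns that fit within max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)
    if budget < 0:
        raise ValueError("system prompt alone exceeds the context budget")
    kept: list[Message] = []
    for m in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(m["content"])
        if cost > budget:
            break  # everything older than this is dropped
        kept.append(m)
        budget -= cost
    return system + list(reversed(kept))  # restore chronological order
```

The dropped older turns are where summarization fits: instead of discarding them, a service could replace them with a short summary message that counts against the same budget.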

Principle 4: One Request ≠ Unlimited Concurrency

A dangerous assumption:

“If the model runs once, it can run for everyone.”

Reality:

- Each request consumes its own KV cache

- VRAM usage scales with concurrency

- Parallel requests are not free
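Because every request carries its own KV cache, the memory left after loading the weights puts a hard ceiling on concurrency. The sketch below is a worst-case estimate, assuming each request reserves KV cache for its full maximum context; the function names and the example numbers (24 GiB device, 16 GB of FP16 weights, 2 GiB headroom, an 8B-style shape with 32 layers, 8 KV heads, head dimension 128) are hypothetical.

```python
GIB = 1024 ** 3


def kv_bytes_per_request(n_layers: int, n_kv_heads: int, head_dim: int,
                         context_tokens: int, bytes_per_elem: int = 2) -> int:
    # Keys and values for every layer and KV head, sized for the request's maximum context.
    return 2 * n_layers * n_kv_heads * head_dim * context_tokens * bytes_per_elem


def max_concurrent_requests(total_bytes: int, weights_bytes: int,
                            headroom_bytes: int, per_request_bytes: int) -> int:
    free_for_kv = total_bytes - weights_bytes - headroom_bytes
    if free_for_kv <= 0:
        return 0
    return free_for_kv // per_request_bytes


if __name__ == "__main__":
    for ctx in (8192, 32768):
        per = kv_bytes_per_request(32, 8, 128, ctx)
        n = max_concurrent_requests(24 * GIB, 16_000_000_000, 2 * GIB, per)
        print(f"context {ctx}: {per / GIB:.0f} GiB per request, max {n} concurrent")
```

Under these assumptions, 8k-token requests allow 7 at once, but 32k-token requests allow only 1: context length and concurrency draw on the same memory. Serving engines that allocate KV cache in pages only consume what each request actually uses, so treat this as a conservative bound.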

Practical design approaches

- Enforce maximum concurrency limits

- Introduce request queues

- Use dynamic batching where appropriate
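The first two approaches can be combined in a small admission gate: a semaphore caps in-flight inference, and a bounded waiting count sheds load instead of letting the queue grow without limit. This is a minimal asyncio sketch; `InferenceGate` and `QueueFullError` are hypothetical names, the model call is any async callable you pass in, and dynamic batching is not shown.

```python
import asyncio
from typing import Any, Awaitable, Callable


class QueueFullError(Exception):
    """Raised when too many requests are already waiting (e.g. map to HTTP 429 or 503)."""


class InferenceGate:
    def __init__(self, max_concurrent: int, max_waiting: int):
        self._slots = asyncio.Semaphore(max_concurrent)
        self._max_waiting = max_waiting
        self._waiting = 0
        self.in_flight = 0
        self.peak_in_flight = 0

    async def run(self, infer: Callable[..., Awaitable[Any]], *args: Any) -> Any:
        # Reject immediately when all slots are busy and the wait queue is full.
        if self._slots.locked() and self._waiting >= self._max_waiting:
            raise QueueFullError("inference queue is full")
        self._waiting += 1
        try:
            await self._slots.acquire()
        finally:
            self._waiting -= 1
        self.in_flight += 1
        self.peak_in_flight = max(self.peak_in_flight, self.in_flight)
        try:
            return await infer(*args)
        finally:
            self.in_flight -= 1
            self._slots.release()


async def _demo() -> None:
    async def fake_infer(x: int) -> int:
        await asyncio.sleep(0.01)  # stand-in for a model call
        return x * 2

    gate = InferenceGate(max_concurrent=2, max_waiting=10)
    results = await asyncio.gather(*(gate.run(fake_infer, i) for i in range(8)))
    print(results, "peak in flight:", gate.peak_in_flight)


if __name__ == "__main__":
    asyncio.run(_demo())
```

Rejecting early keeps tail latency bounded for the requests that are admitted, which matters more here than serving every request eventually.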

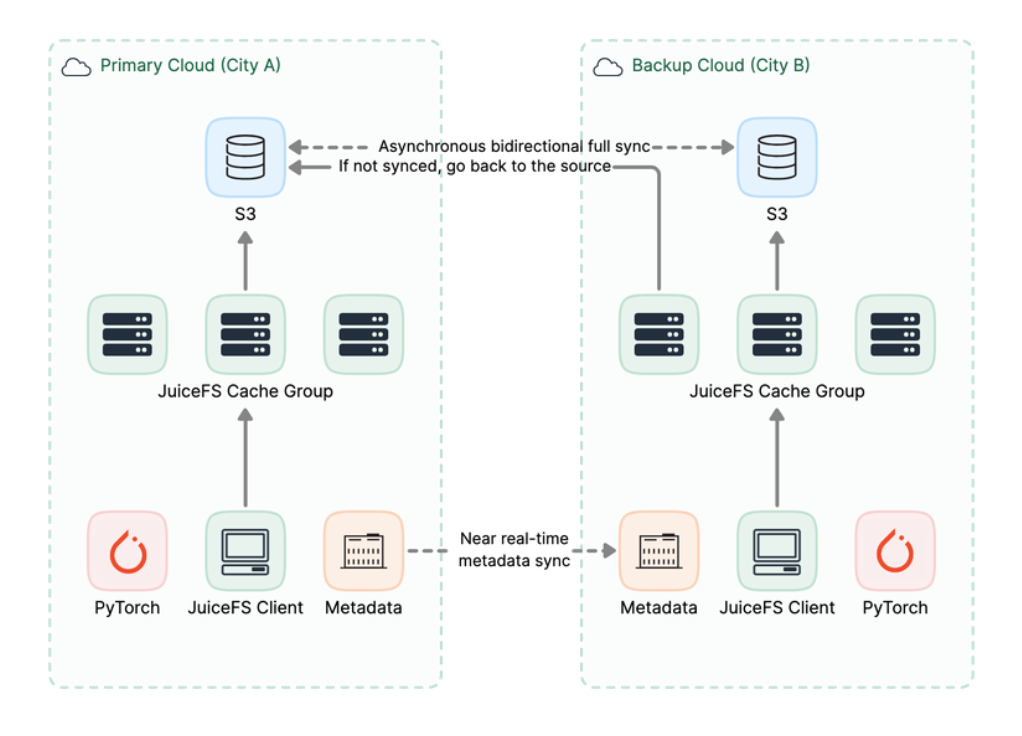

Principle 5: Inference Is a Long-Running Service

Inference systems typically:

- Run 24/7

- Serve users continuously

- Must recover gracefully from failures

Essential operational components

- Health checks

- VRAM and memory monitoring

- OOM protection and limits

- Graceful restarts

👉 Operational stability matters more than peak throughput.
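A health check for an inference service should reflect memory pressure, not just whether the process is up. The sketch below maps model readiness and memory usage to a status a load balancer could act on. The 85% and 95% thresholds are assumptions to tune, and `MemorySnapshot` is a hypothetical container; in practice it might be filled from NVIDIA NVML or your framework's memory query.

```python
from dataclasses import dataclass


@dataclass
class MemorySnapshot:
    used_bytes: int
    total_bytes: int


def health_status(model_loaded: bool, mem: MemorySnapshot,
                  warn_fraction: float = 0.85, critical_fraction: float = 0.95) -> str:
    """Return "ok", "degraded", or "unhealthy" from model state and memory pressure."""
    if not model_loaded:
        return "unhealthy"  # not ready: keep traffic away until the model is resident
    used = mem.used_bytes / mem.total_bytes
    if used >= critical_fraction:
        return "unhealthy"  # close to OOM: stop routing new requests to this instance
    if used >= warn_fraction:
        return "degraded"   # still serving, but alert and consider shedding load
    return "ok"
```

Separating "degraded" from "unhealthy" lets monitoring raise an alert before the orchestrator has to restart the instance.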

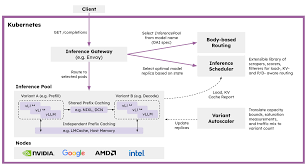

A Typical Inference-First AI Architecture

A common inference-first setup includes:

- API Gateway

- Authentication

- Rate limiting

- Traffic control

- Inference Service

- Resident model in memory

- Context and KV cache management

- Concurrency control

- Memory / VRAM Pool

- Persistent model residency

- Avoid repeated model loading

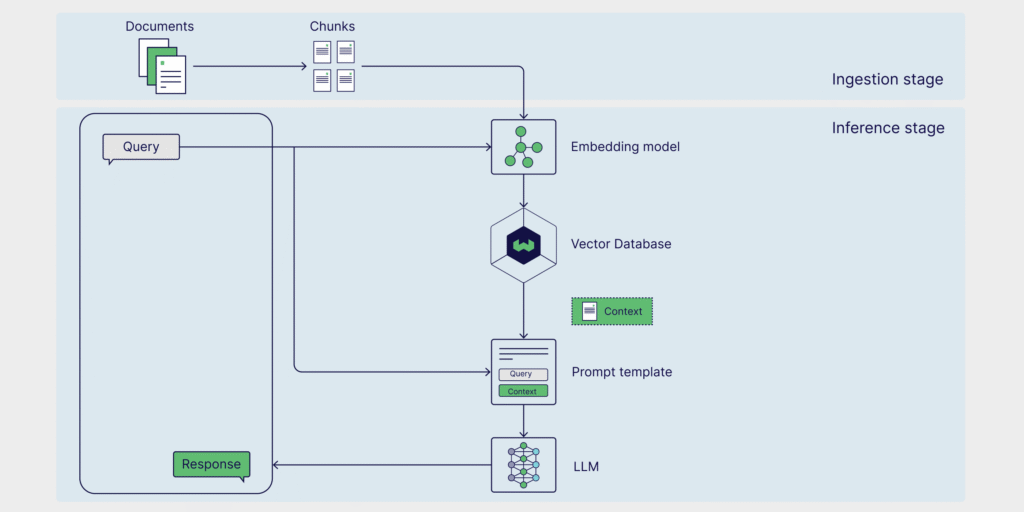

- Optional: RAG Layer

- Vector database

- Context assembly

- Monitoring & Observability

- Latency metrics

- VRAM usage

- Error rates
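"Resident model" and "avoid repeated model loading" come down to loading once per process and handing every request the same instance. The sketch below assumes a thread-based server; `ResidentModel`, `fake_loader`, and `handle_request` are hypothetical, and the loader stands in for whatever actually reads weights and moves them onto the device.

```python
import threading
from typing import Any, Callable, Optional


class ResidentModel:
    """Loads the model once per process and reuses it for every request."""

    def __init__(self, loader: Callable[[], Any]):
        self._loader = loader
        self._model: Optional[Any] = None
        self._lock = threading.Lock()
        self.load_count = 0

    def get(self) -> Any:
        if self._model is None:
            with self._lock:  # double-checked so concurrent first requests load only once
                if self._model is None:
                    self._model = self._loader()
                    self.load_count += 1
        return self._model


def fake_loader() -> Callable[[str], str]:
    # Stand-in for an expensive load (reading weights, moving them to the GPU).
    return lambda prompt: f"echo: {prompt}"


resident = ResidentModel(fake_loader)


def handle_request(prompt: str) -> str:
    model = resident.get()  # same instance every time; no per-request reload
    return model(prompt)
```

In a real deployment the first `get()` would typically happen at startup as a warm-up, with the readiness check failing until it completes, so no user request pays the load cost.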

Inference-First vs Training-First: Design Comparison

| Dimension | Inference-First | Training-First |
|---|---|---|
| Primary goal | Stable service | Model learning |
| Critical resource | Memory | Compute |
| GPU utilization | Moderate | Maximal |
| Latency sensitivity | Extremely high | Low |
| System type | Long-running service | Batch jobs |
| Cost model | Ongoing operations | Upfront investment |

Common Architecture Mistakes to Avoid

❌ Using training-grade GPUs as inference servers

❌ Allowing unlimited context growth

❌ No concurrency limits

❌ No VRAM observability

❌ Reloading models per request

One Concept to Remember

An inference-first AI system is fundamentally a memory-constrained, latency-sensitive service—not a compute benchmark.

Final Conclusion

If 80% of your AI workload is inference, then 80% of your architecture should be designed for inference—not training.

That means:

- You don’t need the most powerful GPU

- You need predictable performance

- You need systems that can run forever without breaking