When teams evaluate AI solutions, one question inevitably comes up:

“Should we build a local LLM, or is using the cloud cheaper?”

The answer is not simply “buy hardware” or “use APIs.”

The real issue is this:

👉 Are you paying a one-time investment, or a cost that burns money every single day?

One-Sentence Takeaway

Local LLMs become clearly cheaper when usage is frequent, long-running, and based on internal data.

If your usage is:

- Occasional

- Experimental

- Uncertain

👉 The cloud is almost always cheaper.

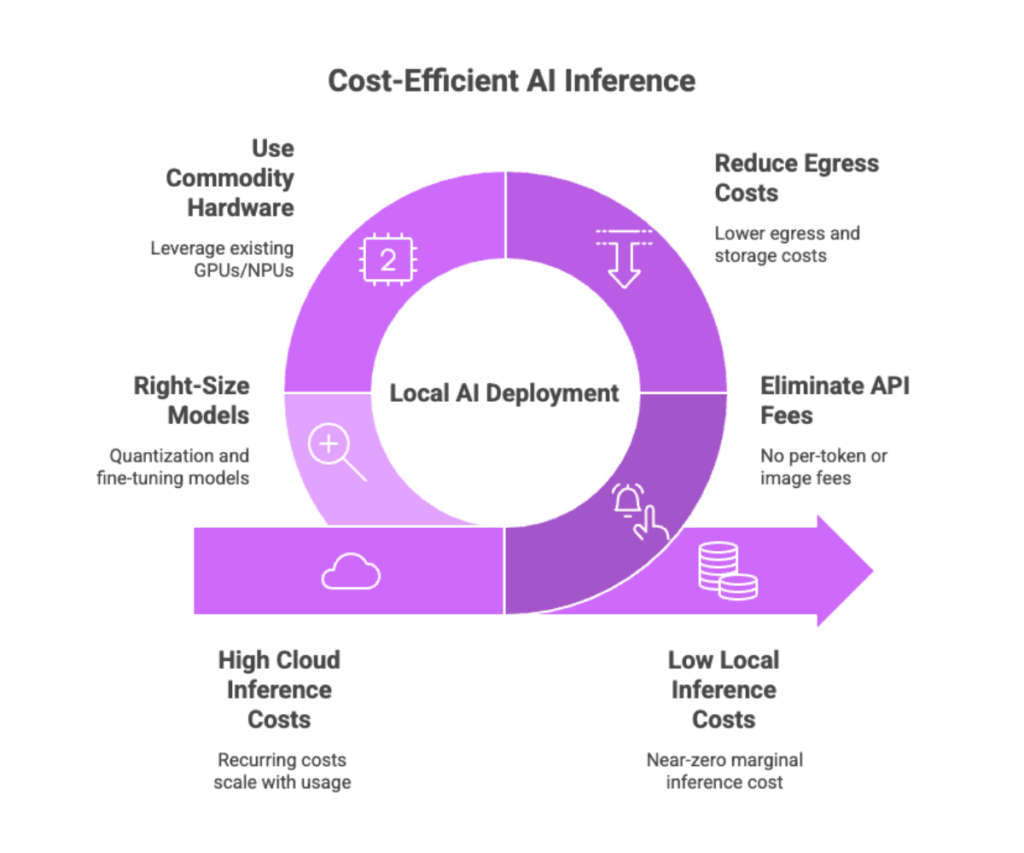

Step One: Understand the Two Cost Models

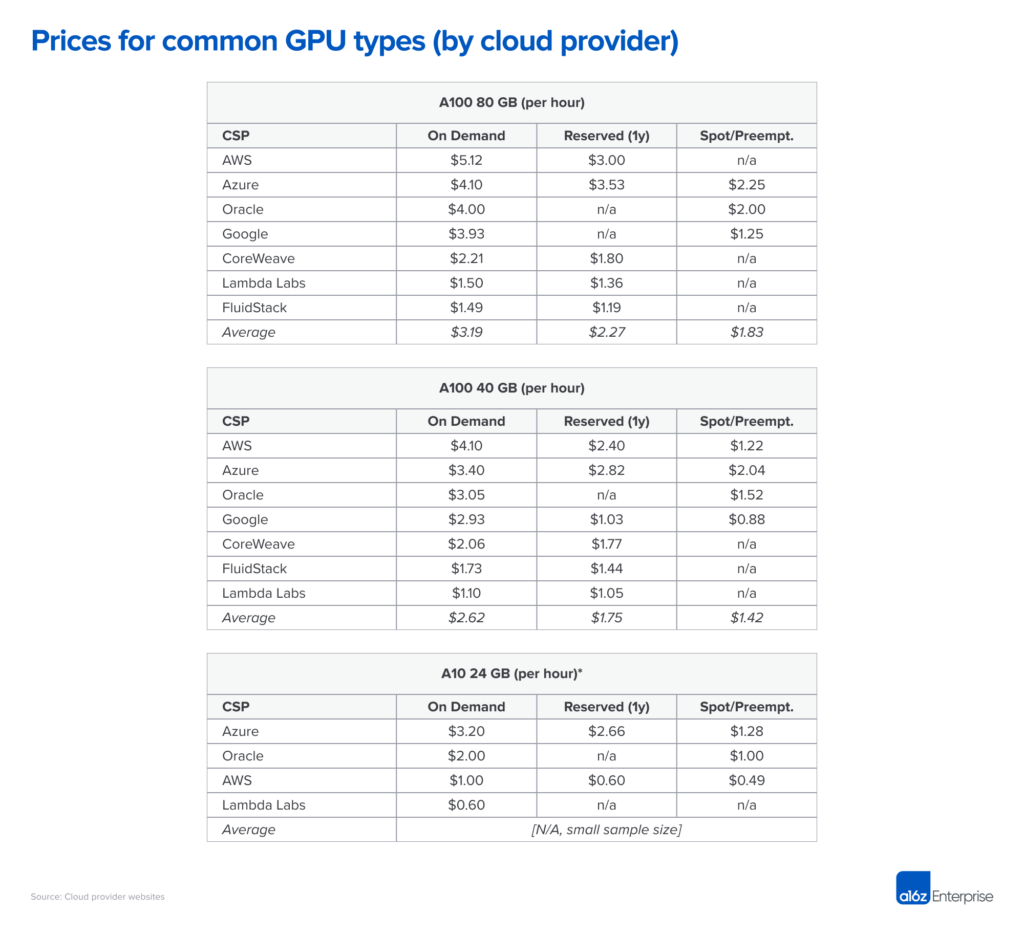

☁️ Cloud LLM Cost Model: Ongoing Rent (OPEX)

Cloud costs usually include:

- API token usage

- GPU inference hours

- Persistent VRAM allocation

- Network traffic

Characteristics:

- Pay more as usage increases

- Easy to start, hard to predict long-term

- Costs quietly compound over time

📌 Cloud AI is an operational expense (OPEX).
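As a rough illustration, the monthly cloud bill is simply the sum of the components listed above. This is a minimal sketch, not any provider's pricing formula. The function name, the per-million-token pricing scheme, and the example prices are hypothetical. Persistent VRAM allocation is assumed to be billed as part of GPU hours.

```python
def cloud_monthly_cost(
    input_tokens: float,
    output_tokens: float,
    price_in_per_m: float,
    price_out_per_m: float,
    gpu_hours: float = 0.0,
    gpu_hourly_rate: float = 0.0,
    egress_gb: float = 0.0,
    price_per_gb: float = 0.0,
) -> float:
    """Hypothetical monthly cloud cost model (all prices are placeholders)."""
    # API tokens are commonly priced per million, with separate input/output rates.
    token_cost = (input_tokens / 1e6) * price_in_per_m + (output_tokens / 1e6) * price_out_per_m
    # Dedicated GPU inference time; persistent VRAM is assumed to be folded in here.
    gpu_cost = gpu_hours * gpu_hourly_rate
    # Network traffic (e.g. egress), billed per GB.
    network_cost = egress_gb * price_per_gb
    return token_cost + gpu_cost + network_cost


# Made-up prices: 50M input tokens at $1/M, 10M output tokens at $4/M.
print(cloud_monthly_cost(50e6, 10e6, price_in_per_m=1.0, price_out_per_m=4.0))  # prints 90.0
```

Every term is multiplied by a usage figure. That makes the bill easy to start but hard to predict.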

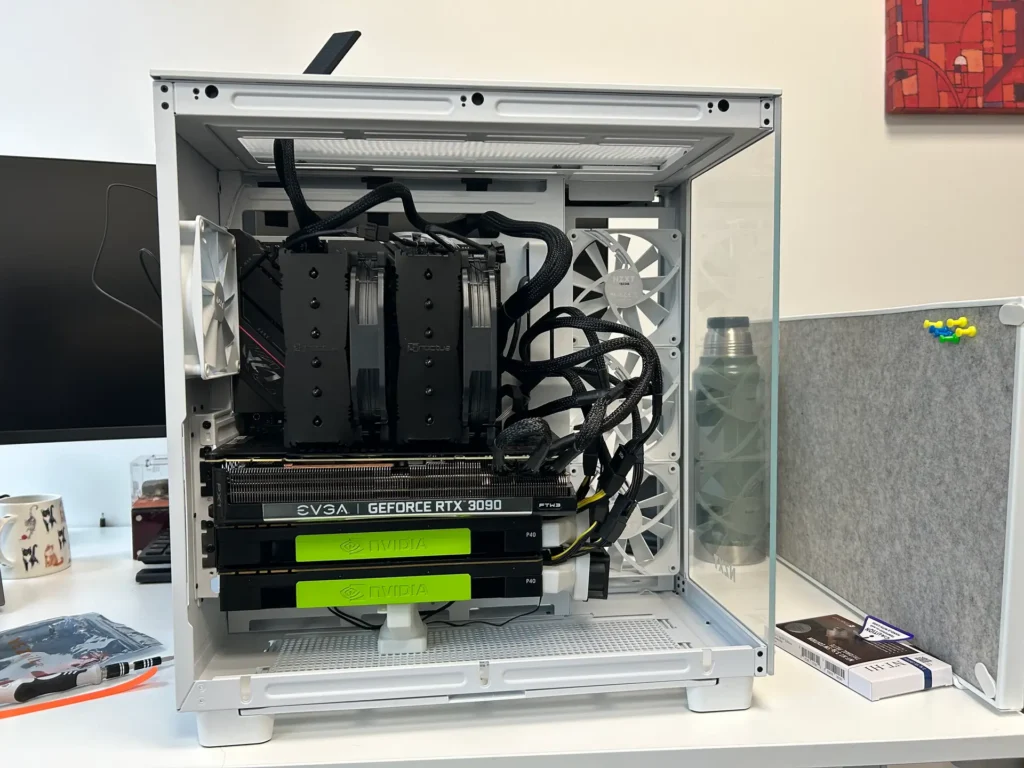

🖥️ Local LLM Cost Model: Upfront Investment (CAPEX)

Local LLM costs typically include:

- One-time hardware purchase (GPU / server)

- Electricity

- Minimal ongoing maintenance

Characteristics:

- Higher initial cost

- Marginal cost per query approaches zero

- Gets cheaper the longer you use it

📌 Local AI is a capital expense (CAPEX).
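To make "marginal cost approaches zero" and "cheaper the longer you use it" concrete, here is a minimal sketch. It spreads the hardware price over an assumed lifespan and adds electricity and upkeep. The function names, wattage, tariff, and hardware price are all hypothetical placeholders, and the per-query figure ignores idle power.

```python
def local_monthly_cost(
    hardware_cost: float,
    lifespan_months: int,
    avg_power_watts: float,
    hours_per_month: float,
    price_per_kwh: float,
    maintenance_per_month: float = 0.0,
) -> float:
    """Hypothetical monthly cost of a local server, hardware amortized over its lifespan."""
    # Spread the one-time purchase evenly across the months you expect to use it.
    amortized_hardware = hardware_cost / lifespan_months
    # Electricity: average draw (kW) x hours running x tariff.
    electricity = (avg_power_watts / 1000) * hours_per_month * price_per_kwh
    return amortized_hardware + electricity + maintenance_per_month


def electricity_per_query(avg_power_watts: float, seconds_per_query: float, price_per_kwh: float) -> float:
    """Energy cost of a single query, ignoring idle power and hardware wear."""
    kwh = (avg_power_watts / 1000) * (seconds_per_query / 3600)
    return kwh * price_per_kwh


# Placeholder numbers: a $12,000 server kept for 3 years, drawing 500 W around the clock at $0.20/kWh.
print(round(local_monthly_cost(12_000, 36, 500, 730, 0.20), 2))  # prints 406.33
print(electricity_per_query(500, 5, 0.20))
```

The second figure is a small fraction of a cent. Raising `lifespan_months` shrinks the amortized hardware share, which is the "gets cheaper the longer you use it" effect.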

The Key Question Is Not “Which Is Cheaper?”—It’s “How Long Will You Use It?”

A Critical Mindset Shift

Local LLMs don’t save money in the first month.

They save money in the second year.
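The "second year" claim comes down to a break-even calculation. Local spending starts with the hardware purchase and grows slowly. Cloud spending starts at zero and grows by the full bill every month. The sketch below assumes a flat monthly cloud bill and flat local running costs, and the numbers are purely illustrative.

```python
import math
from typing import Optional


def break_even_month(capex: float, cloud_monthly: float, local_running_monthly: float) -> Optional[int]:
    """First whole month in which cumulative local cost is no higher than cumulative cloud cost.

    Cumulative local cost after m months: capex + m * local_running_monthly
    Cumulative cloud cost after m months: m * cloud_monthly
    """
    monthly_savings = cloud_monthly - local_running_monthly
    if monthly_savings <= 0:
        return None  # Running locally costs as much as the cloud: the hardware never pays for itself.
    return math.ceil(capex / monthly_savings)


# Hypothetical numbers: $12,000 hardware, $1,000/month cloud bill, $100/month electricity and upkeep.
print(break_even_month(12_000, 1_000, 100))  # prints 14
```

In this example the local setup is still behind at month 13 ($13,300 vs. $13,000) and pulls ahead at month 14 ($13,400 vs. $14,000), that is, in the second year. With light usage the monthly savings shrink toward zero and the break-even point moves out indefinitely.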

When Local LLMs Start Beating the Cloud

Real-world deployments point to five major tipping points.

① You Use AI Every Day

If your AI system is:

- Used daily

- Queried multiple times per day

- Shared across teams or departments

Then cloud costs stop being occasional and quickly turn into a fixed, recurring monthly bill.

👉 This is the first strong signal that local LLMs make sense.
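A quick back-of-the-envelope estimate shows how daily use becomes a predictable monthly bill. This sketch assumes a single blended price per million tokens and about 22 working days a month. The team size, query count, and tokens per query are hypothetical.

```python
def monthly_tokens(users: int, queries_per_user_per_day: float, tokens_per_query: float,
                   working_days: int = 22) -> float:
    """Rough monthly token volume for a tool used every working day."""
    return users * queries_per_user_per_day * tokens_per_query * working_days


def monthly_api_bill(tokens: float, blended_price_per_m: float) -> float:
    """API cost at a single blended (input + output) price per million tokens."""
    return tokens / 1e6 * blended_price_per_m


# Hypothetical team: 50 users, 20 queries each per day, ~3,000 tokens per query, $5 per million tokens.
tokens = monthly_tokens(50, 20, 3_000)
print(tokens, monthly_api_bill(tokens, 5.0))  # prints 66000000 330.0
```

Because the volume comes from daily habits rather than one-off projects, the bill recurs every month. It also grows linearly as more teams share the tool.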

② Inference Is a Persistent Service, Not a One-Off Task

The cloud excels at:

- Short-lived training jobs

- Occasional API calls

But if your LLM:

- Runs 24/7

- Waits for users to ask questions

- Must respond immediately

Then you are effectively renting GPUs long-term.

📌 Long-term rental is rarely cheap.
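An always-on endpoint is billed for every hour, including the hours when nobody is asking questions. The sketch below compares 24/7 GPU rental with a one-time purchase. The hourly rate and hardware price are hypothetical, and electricity and upkeep for the purchased server are ignored for simplicity.

```python
HOURS_PER_MONTH = 730  # about 24 * 365 / 12


def always_on_rent(gpu_hourly_rate: float, gpus: int = 1) -> float:
    """Monthly cost of keeping rented GPUs running 24/7, whether or not anyone is using them."""
    return gpu_hourly_rate * HOURS_PER_MONTH * gpus


def months_of_rent_equal_to_purchase(hardware_cost: float, gpu_hourly_rate: float, gpus: int = 1) -> float:
    """How many months of 24/7 rental add up to the hardware price (electricity and upkeep ignored)."""
    return hardware_cost / always_on_rent(gpu_hourly_rate, gpus)


# Hypothetical: one GPU at $2/hour versus buying a comparable $15,000 server.
print(always_on_rent(2.0))                                       # prints 1460.0
print(round(months_of_rent_equal_to_purchase(15_000, 2.0), 1))  # prints 10.3
```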

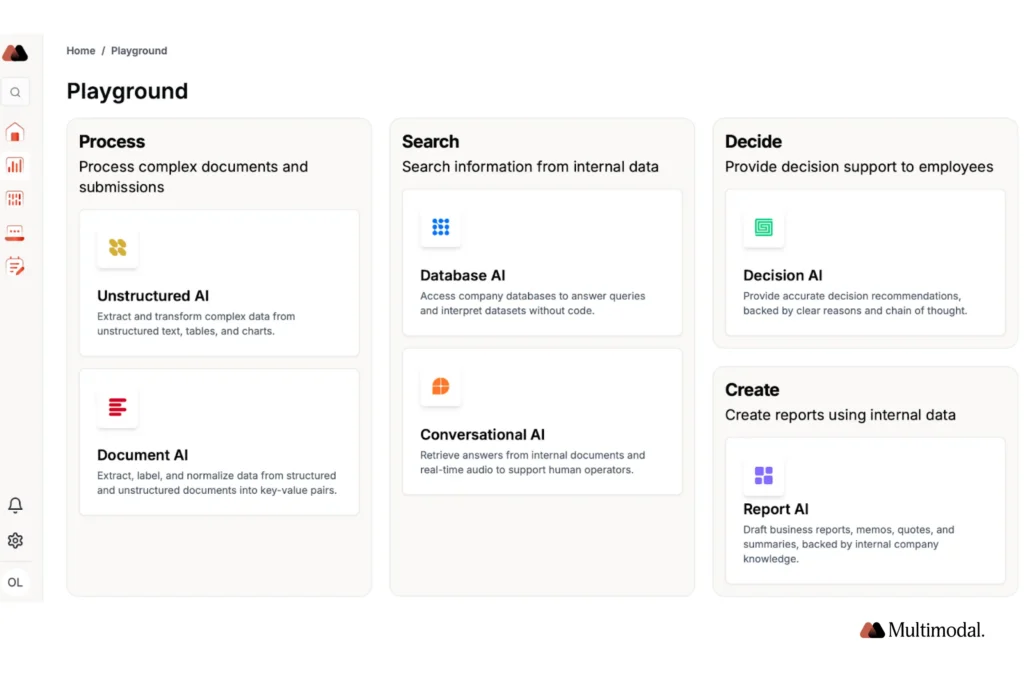

③ Your Data Is Internal or Sensitive

If your AI works with:

- Internal documents

- Contracts, legal data

- ERP, HR, or operational systems

Even when using the cloud is technically possible, it often requires:

- Security reviews

- Legal agreements

- Data retention concerns

👉 Local LLMs are often cheaper and simpler from a risk perspective.

④ User Count Is Stable, Not Explosive

Local LLMs work best when:

- User count is predictable

- 10, 20, or 50 users

- No sudden spikes to thousands of users

📌 Because:

- Cloud costs scale with usage

- Local costs scale very slowly with users

👉 Predictability favors local deployment.
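The difference in scaling can be sketched as a straight line versus a staircase. Cloud cost is modeled per user, while local cost rises only when another GPU is needed. The per-user price, the GPU's monthly cost, and the "users per GPU" capacity are hypothetical. Real capacity depends on model size, context length, and concurrency.

```python
import math


def cloud_cost_for_users(users: int, cost_per_user_per_month: float) -> float:
    """Usage-based pricing: the bill grows in step with the number of users."""
    return users * cost_per_user_per_month


def local_cost_for_users(users: int, users_per_gpu: int, monthly_cost_per_gpu: float) -> float:
    """Local capacity grows in whole GPUs, so cost rises in steps rather than per user."""
    gpus_needed = max(1, math.ceil(users / users_per_gpu))
    return gpus_needed * monthly_cost_per_gpu


# Hypothetical: $40 per user per month in the cloud; one GPU ($400/month amortized) serves ~25 users.
for users in (10, 20, 50):
    print(users, cloud_cost_for_users(users, 40.0), local_cost_for_users(users, 25, 400.0))
```

This prints `10 400.0 400.0`, `20 800.0 400.0`, and `50 2000.0 800.0`. The local cost moves only when a capacity boundary is crossed. That is easy to plan for when the user count is stable and hard to plan for when it can spike.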

⑤ You Know This Will Be a Long-Term Tool

If you already believe:

- This is not a PoC

- It will be used for 2–3 years or more

- It will become a daily productivity tool

Then you’re making a long-term investment, not a short-term experiment.

When the Cloud Is Still the Cheaper Choice

To be fair, the cloud is usually the better choice in these situations:

☁️ Cloud-favored scenarios:

- Low-frequency or occasional use

- Proof-of-concept or demos

- Highly uncertain usage patterns

- A need to launch immediately

- No desire to manage hardware

📌 The cloud is ideal for uncertainty and speed.

A Practical Decision Table

| Question | Favors Cloud | Favors Local |
|---|---|---|
| Usage frequency | Occasional | Daily |
| Inference pattern | Sporadic | Persistent |
| User count | Uncertain | Stable |
| Data sensitivity | Public / low | Internal / sensitive |
| Cost preference | Small monthly fees | One-time investment |
| Expected lifespan | < 1 year | ≥ 2 years |

👉 The more of your answers fall in the right-hand column, the stronger the case for local LLMs.
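The decision table can also be written as a simple checklist that counts how many answers favor local deployment. This is a minimal sketch, and the `Workload` field names are hypothetical. It deliberately returns a count rather than a verdict, because the table sets no threshold. A lifespan between one and two years is treated as not favoring local, since the table leaves that range open.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Answers to the questions in the decision table (field names are hypothetical)."""
    daily_use: bool
    persistent_inference: bool
    stable_user_count: bool
    sensitive_data: bool
    prefers_one_time_investment: bool
    expected_lifespan_years: float


def local_checks(w: Workload) -> int:
    """Count how many rows of the table fall in the 'Favors Local' column (0 to 6)."""
    favors_local = [
        w.daily_use,
        w.persistent_inference,
        w.stable_user_count,
        w.sensitive_data,
        w.prefers_one_time_investment,
        w.expected_lifespan_years >= 2,
    ]
    return sum(favors_local)


# Hypothetical internal assistant: everything points local except the budgeting preference.
internal_assistant = Workload(True, True, True, True, False, 3)
print(local_checks(internal_assistant), "of 6")  # prints 5 of 6
```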

One Sentence to Remember

The cloud charges you for uncertainty.

Local LLMs reward certainty.

Final Conclusion

Local LLMs are not cheaper at the beginning—but they are often cheaper in high-frequency, long-term, internal-use scenarios.

The real question isn’t:

- “Is local cheaper than cloud?”

It’s:

- “Have we reached the stage where local deployment makes economic sense?”