When organizations adopt LLMs, one question almost always appears early:

“Should we use RAG, or should we fine-tune the model?”

The question itself is misleading, because it assumes RAG and fine-tuning are interchangeable alternatives.

They are not.

👉 RAG and fine-tuning solve fundamentally different problems.

This article explains the difference in plain terms—so you don’t waste time, money, or infrastructure.

One-Sentence Takeaway

RAG solves the problem of missing information; fine-tuning solves the problem of incorrect behavior.

Once you separate these two, the right choice becomes obvious.

First, Understand What Each One Actually Does

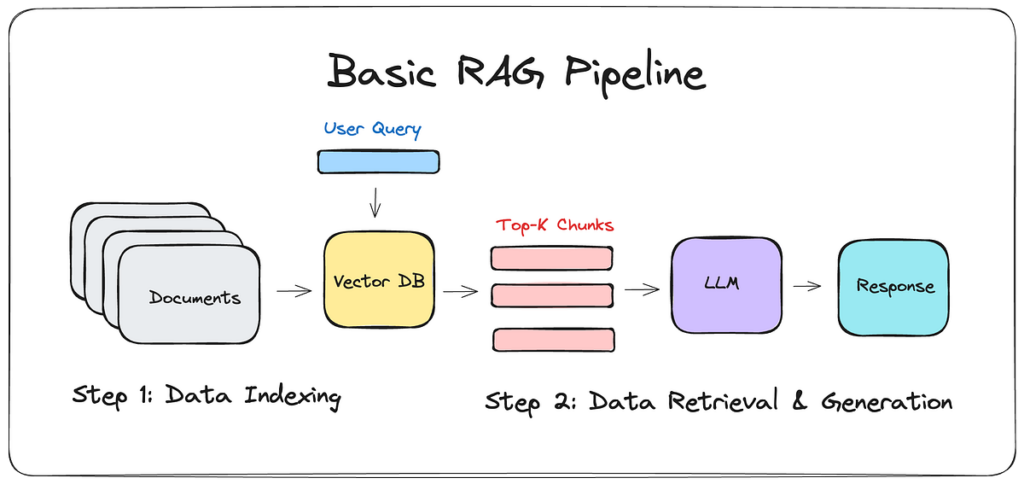

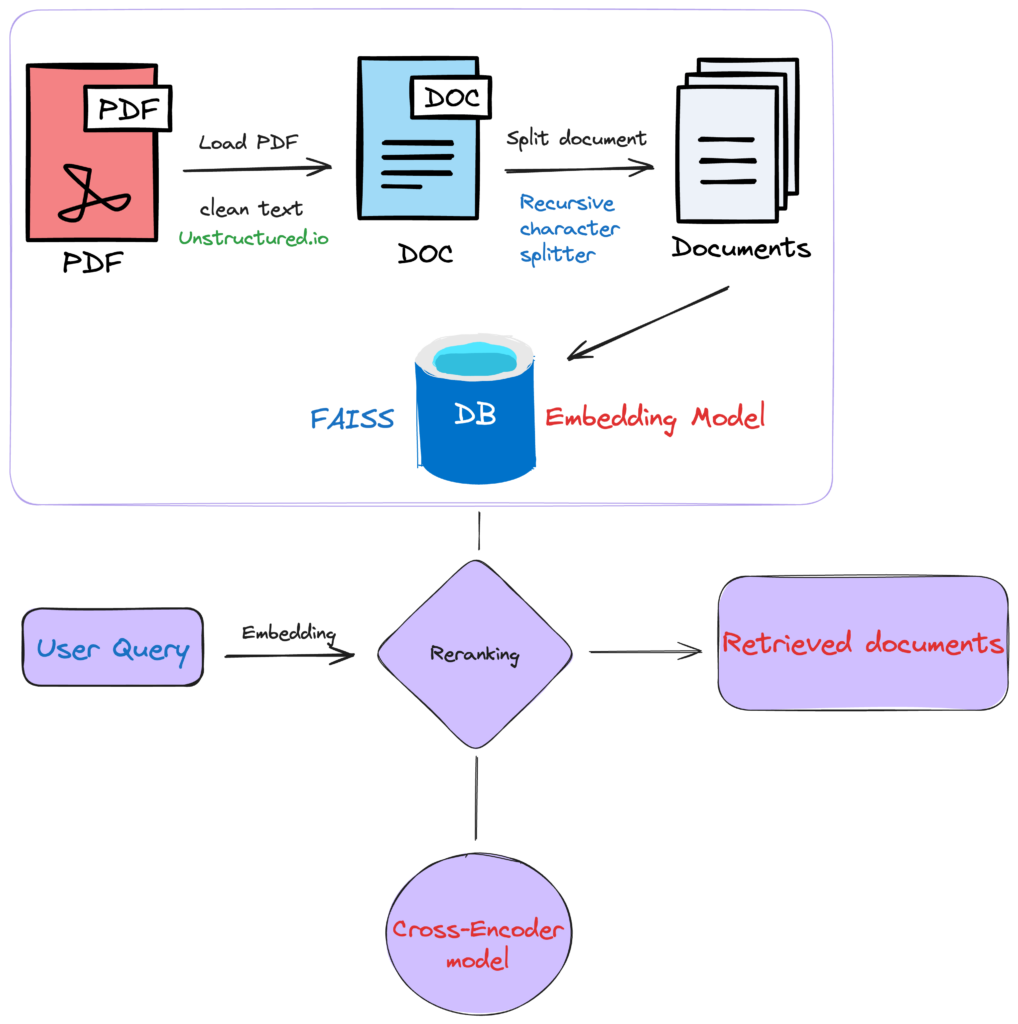

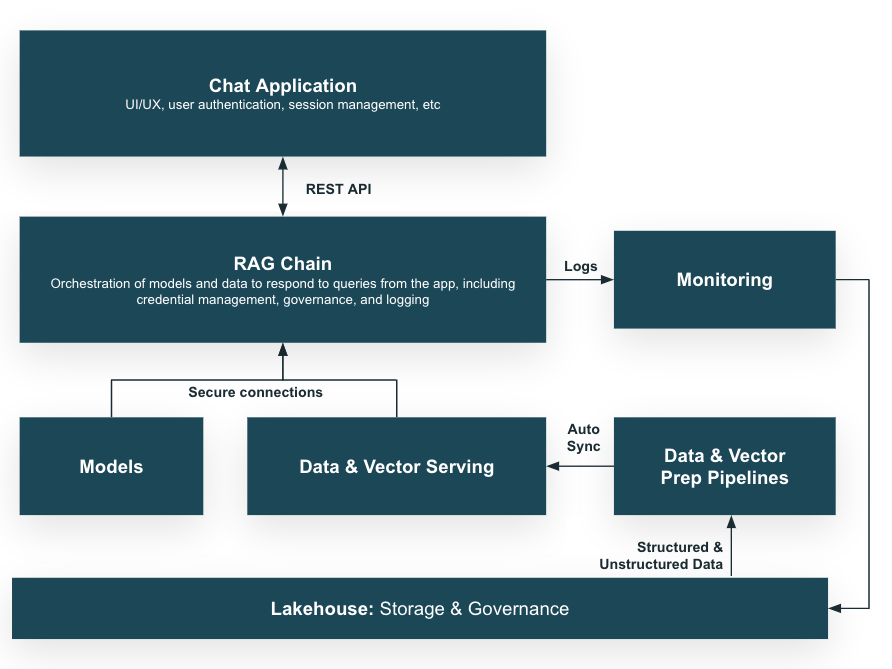

🔎 RAG (Retrieval-Augmented Generation)

- Does not modify the model

- Does not change weights

- Retrieves relevant data at runtime

- Affects only the current response

👉 RAG is real-time data injection during inference.
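
To make the four points above concrete, here is a minimal sketch of the retrieval step. It assumes a tiny in-memory document store and a naive word-overlap ranking; `DOCUMENTS`, `retrieve`, and `build_prompt` are hypothetical names chosen for illustration, and a real system would more likely use embeddings and a vector index. The point is only that the retrieved text is injected into the prompt at request time while the model itself stays untouched.

```python
import re

# Hypothetical in-memory knowledge base. A production system would
# typically use a search index or vector store instead.
DOCUMENTS = {
    "vacation-policy": "Employees accrue 1.5 vacation days per month of service.",
    "expense-policy": "Expense reports must be submitted within 30 days of purchase.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: dict[str, str], top_k: int = 1) -> list[str]:
    """Return the IDs of the top_k documents sharing the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc_id: len(question_words & tokenize(documents[doc_id])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, documents: dict[str, str]) -> str:
    """Inject the retrieved text into the prompt; no model weights are involved."""
    context = "\n".join(documents[doc_id] for doc_id in retrieve(question, documents))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_prompt("How many vacation days do employees accrue?", DOCUMENTS)
print(prompt)
```

The resulting prompt would then be sent to whatever model you already use. Editing an entry in `DOCUMENTS` changes the next prompt and nothing else.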

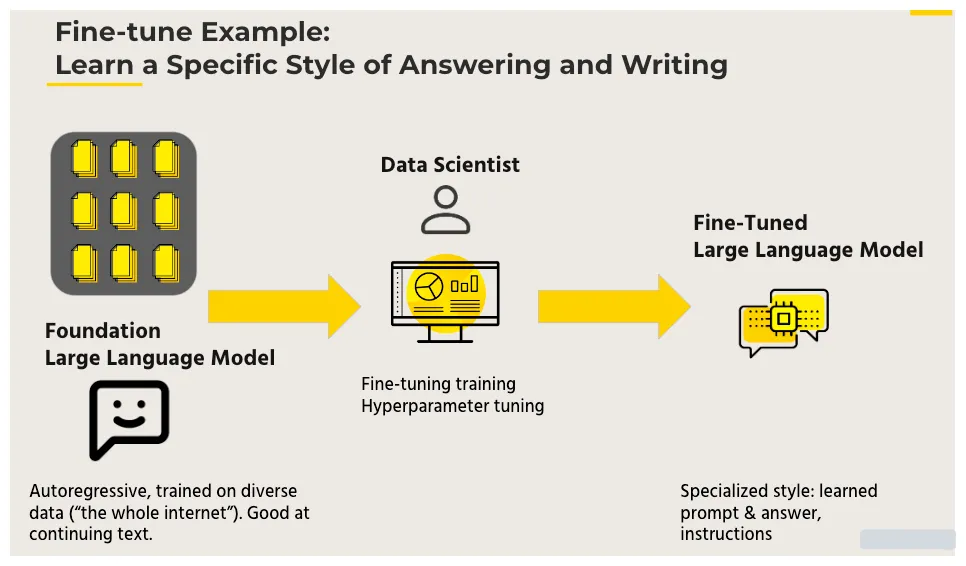

🧠 Fine-Tuning

- Modifies model weights

- Requires training

- Knowledge becomes embedded in the model

- Affects all future responses

👉 Fine-tuning changes model behavior at the training layer.
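
The contrast with RAG can be illustrated with a deliberately tiny stand-in. The sketch below is not how an LLM is fine-tuned in practice: it assumes a one-parameter model `y = weight * x` and plain gradient descent, and the names `predict` and `fine_tune` are hypothetical. It only shows the principle that training rewrites the weight, and that every later prediction is affected by that change.

```python
def predict(weight: float, x: float) -> float:
    """A one-parameter 'model': the output is simply weight * x."""
    return weight * x


def fine_tune(
    weight: float,
    examples: list[tuple[float, float]],
    learning_rate: float = 0.1,
    epochs: int = 50,
) -> float:
    """Run gradient descent on squared error and return the updated weight."""
    for _ in range(epochs):
        for x, target in examples:
            error = predict(weight, x) - target
            # Gradient of (weight * x - target) ** 2 with respect to weight.
            weight -= learning_rate * 2 * error * x
    return weight


base_weight = 1.0
# Illustrative training examples encoding the desired behavior y = 3 * x.
examples = [(1.0, 3.0), (2.0, 6.0)]
tuned_weight = fine_tune(base_weight, examples)

print(predict(base_weight, 5.0))   # behavior before training
print(predict(tuned_weight, 5.0))  # behavior after training, for any future input
```

Unlike the RAG case, undoing this change means training again or restoring the old weight; there is no single entry to delete.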

A Simple Mental Model

RAG is like being allowed to look up reference materials during an exam.

Fine-tuning is like memorizing how to solve the problems.

- Changing information → look it up

- Stable skills → memorize them

When You Should Definitely Use RAG

Typical RAG use cases

- Internal company documents

- SOPs, policies, contracts

- ERP / EIP / operational knowledge

- Regulations and compliance material

- Frequently changing data

📌 Why?

- You don’t want to retrain every time a document changes

- Updates must take effect immediately

- Data must be removable and auditable

👉 This type of knowledge should never be baked into model weights.

When Fine-Tuning Makes Sense

Typical fine-tuning use cases

- Writing tone and style

- Fixed response formats

- Repetitive task workflows

- Domain-specific language usage

- Structured tasks (classification, extraction, summarization)

📌 These share key properties:

- Stable over time

- Consistent

- Represent skills, not facts

👉 Skills are worth training. Facts are not.

What Happens If You Use Fine-Tuning Instead of RAG?

This is where teams often make expensive mistakes.

❌ Problem 1: Exploding Costs

- Training requires GPUs

- Every document update triggers retraining

- Costs scale with document volume

👉 RAG exists specifically to avoid this.

❌ Problem 2: Irreversible or Outdated Knowledge

- Old documents become permanently embedded

- Deleting or correcting a specific piece of knowledge in trained weights is difficult and hard to verify

- Legal and compliance risks increase

👉 With RAG, removing a document from the index takes effect on the very next query.
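
As a rough illustration of why removal is simple on the retrieval side, the sketch below uses a toy keyword index. `DocumentIndex` and the sample pricing documents are hypothetical, and a real deployment would call the delete operation of its own search or vector store. The behavior to notice is that a removed document stops appearing in results immediately, with no retraining step.

```python
class DocumentIndex:
    """Toy keyword index standing in for a real search or vector index."""

    def __init__(self) -> None:
        self._docs: dict[str, str] = {}

    def add(self, doc_id: str, text: str) -> None:
        self._docs[doc_id] = text

    def remove(self, doc_id: str) -> None:
        # Deleting the entry is all it takes; no model weights are involved.
        self._docs.pop(doc_id, None)

    def search(self, keyword: str) -> list[str]:
        """Return the sorted IDs of documents containing the keyword."""
        needle = keyword.lower()
        return sorted(
            doc_id for doc_id, text in self._docs.items() if needle in text.lower()
        )


index = DocumentIndex()
# Made-up documents for illustration only.
index.add("pricing-2023", "Legacy pricing: the premium plan costs 40 per seat.")
index.add("pricing-2024", "Current pricing: the premium plan costs 55 per seat.")

before = index.search("premium plan")
index.remove("pricing-2023")
after = index.search("premium plan")

print(before)
print(after)
```

Because the index is the single source of what the model can see, it also gives you one place to audit.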

❌ Problem 3: Worse Results Than Expected

- Inconsistent document quality introduces noise

- Errors become permanent

- Debugging is extremely difficult

👉 With RAG, a bad document can be corrected or removed at the source, so its mistakes stay temporary.

Can You Use Only RAG Without Fine-Tuning?

Yes—and often that’s enough.

However, if you notice:

- The model misunderstands instructions

- Output format is inconsistent

- Reasoning steps are unreliable

👉 Then the problem is not missing data—it’s behavior.

That’s where fine-tuning helps.
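
One of these symptoms, inconsistent output format, is easy to measure before deciding anything. The sketch below assumes the model is supposed to return a JSON object with certain keys; `format_pass_rate`, the required keys, and the sample outputs are all hypothetical. A low pass rate on prompts that already contain the right information would point to a behavior problem rather than a data problem.

```python
import json


def is_well_formed(output: str, required_keys: set[str]) -> bool:
    """True if the output is a JSON object containing every required key."""
    try:
        parsed = json.loads(output)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and required_keys.issubset(parsed)


def format_pass_rate(outputs: list[str], required_keys: set[str]) -> float:
    """Fraction of outputs that match the expected format (0.0 for an empty list)."""
    if not outputs:
        return 0.0
    passed = sum(is_well_formed(output, required_keys) for output in outputs)
    return passed / len(outputs)


# Made-up model outputs for illustration only.
sample_outputs = [
    '{"category": "billing", "priority": "high"}',
    "Category: billing, priority: high",
    '{"category": "shipping"}',
    '{"category": "returns", "priority": "low"}',
]

rate = format_pass_rate(sample_outputs, {"category", "priority"})
print(rate)
```

Running the same check before and after a fine-tuning run gives a simple way to see whether the training actually changed the behavior.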

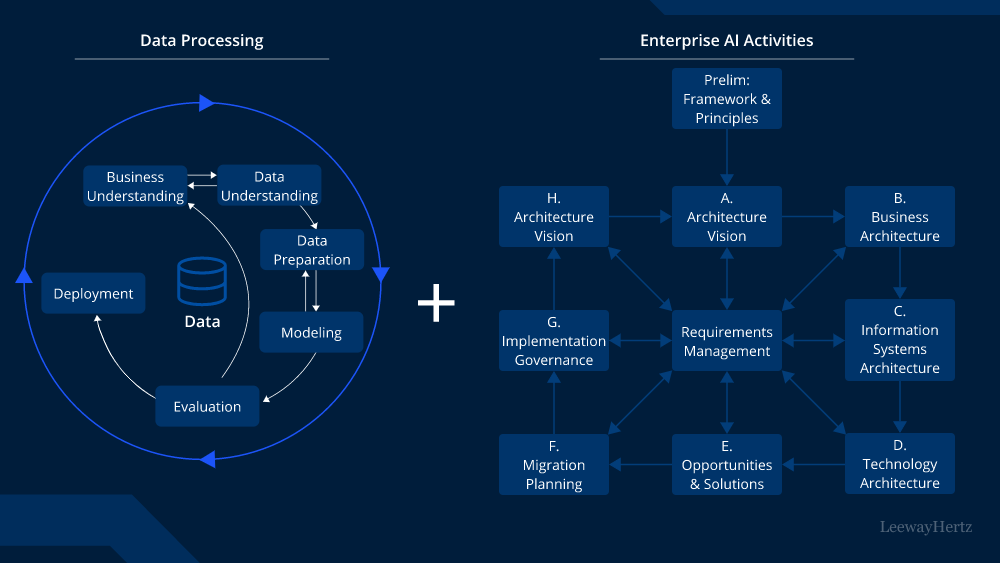

The Best Real-World Approach: Use Both (Correctly)

The proper division of labor

- Fine-Tuning

  - Teaches how to respond

  - Controls format, tone, logic, workflows

- RAG

  - Supplies what information to use

  - Documents, policies, facts, references

👉 Behavior comes from training. Knowledge comes from retrieval.

A Quick Decision Table

| Problem Type | RAG | Fine-Tuning |
|---|---|---|
| Frequently changing data | ✅ | ❌ |
| Immediate updates needed | ✅ | ❌ |
| Inconsistent output format | ❌ | ✅ |
| Task-specific skills | ❌ | ✅ |
| Compliance / removability | ✅ | ❌ |
| Cost predictability | ✅ | ❌ |
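
If you want to apply the table programmatically, for example in a project intake checklist, it can be encoded as a simple lookup. This is only a sketch: `Approach`, `DECISION_TABLE`, and `recommend` are hypothetical names, and the mapping just mirrors the rows of the table.

```python
from enum import Enum


class Approach(Enum):
    RAG = "RAG"
    FINE_TUNING = "fine-tuning"


# Mirrors the rows of the decision table in this article.
DECISION_TABLE = {
    "frequently changing data": Approach.RAG,
    "immediate updates needed": Approach.RAG,
    "inconsistent output format": Approach.FINE_TUNING,
    "task-specific skills": Approach.FINE_TUNING,
    "compliance / removability": Approach.RAG,
    "cost predictability": Approach.RAG,
}


def recommend(problems: list[str]) -> set[Approach]:
    """Return the approaches the table suggests for the given problem types."""
    return {DECISION_TABLE[problem.strip().lower()] for problem in problems}


needs = ["Frequently changing data", "Inconsistent output format"]
print(recommend(needs))
```

A project that lists problems from both columns gets both approaches back, which matches the combined setup described earlier. An unknown problem type raises a `KeyError` rather than guessing.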

One Sentence to Remember (Critical)

Never use fine-tuning to solve a data problem, and never use RAG to solve a behavior problem.

Final Conclusion

RAG and fine-tuning are not a binary choice—they are complementary tools.

- Data problems → RAG

- Behavior problems → Fine-tuning

- Enterprise-grade systems → both, used correctly

Follow this rule, and you’ll avoid most architectural mistakes before they happen.