When planning an AI system, a common first question is:

“Should we deploy this in the cloud, or on-prem?”

But this question is missing a crucial step.

The real question should be:

👉 Is your AI workload primarily training or inference?

Because training and inference often lead to completely different answers when choosing between cloud and on-prem.

One-Sentence Takeaway

The first decision in AI architecture is training vs inference.

Only after that should you decide between cloud and on-prem.

If you reverse the order, you risk choosing the wrong setup.

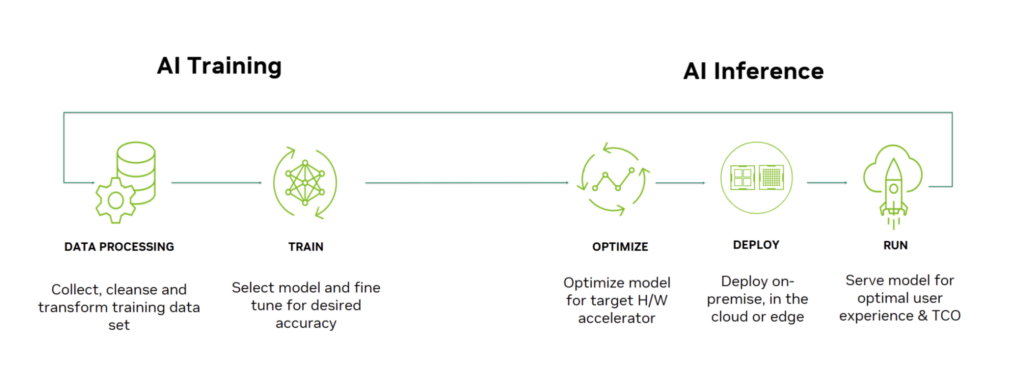

First, Clarify the Two Workloads

🧠 AI Training

- Purpose: Teach the model, update weights

- Characteristics:

  - Extremely compute-intensive

  - Bursty, short-lived workloads

  - Resources can be released once training finishes

- Cost profile:

  - Compute-driven

  - Pay for peak power, briefly

💬 AI Inference

- Purpose: Use the trained model

- Characteristics:

  - Long-running, always-on

  - Highly sensitive to latency and stability

  - Memory capacity often more important than raw compute

- Cost profile:

  - Operations-driven

  - Continuous, 24/7 cost

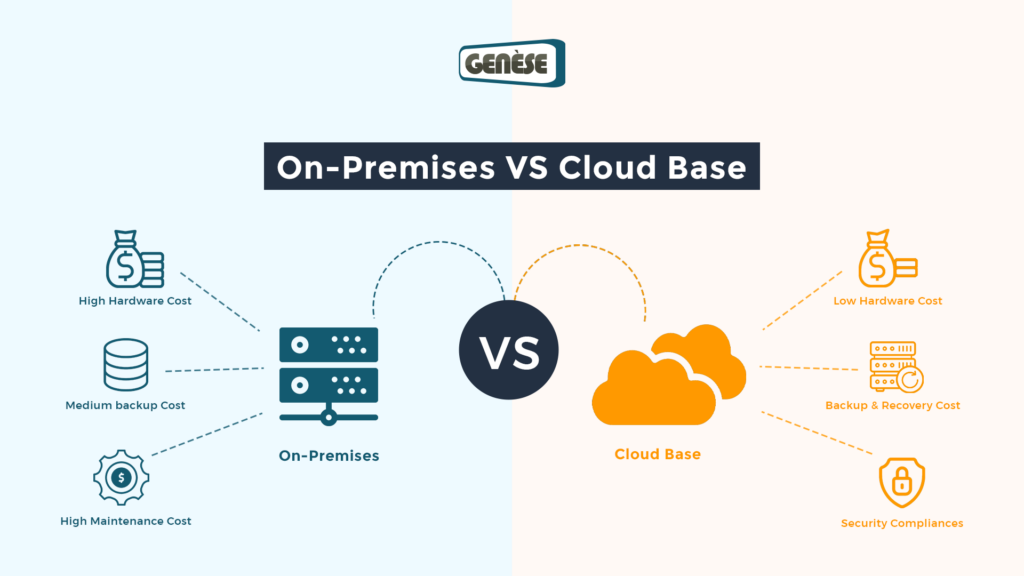

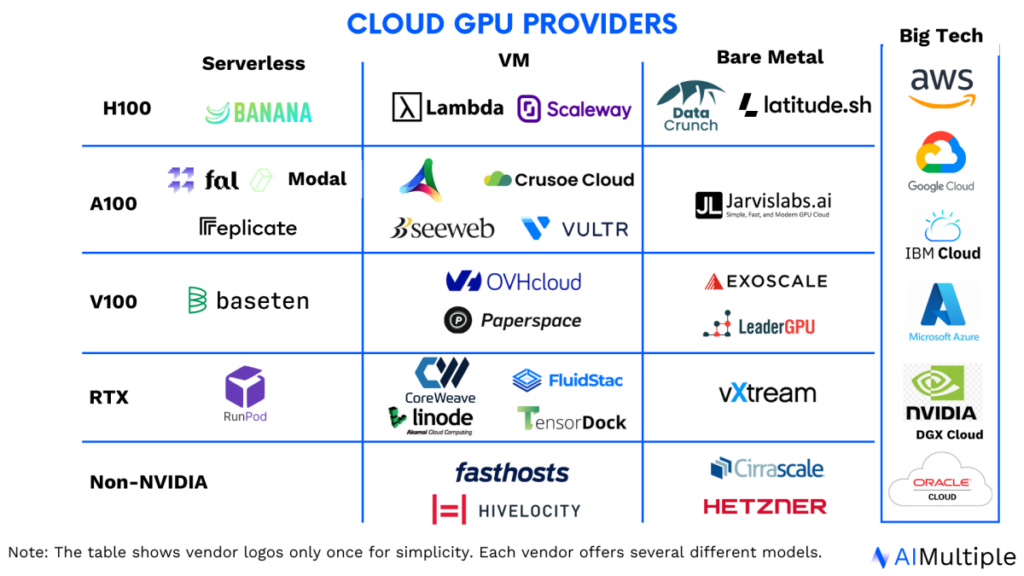

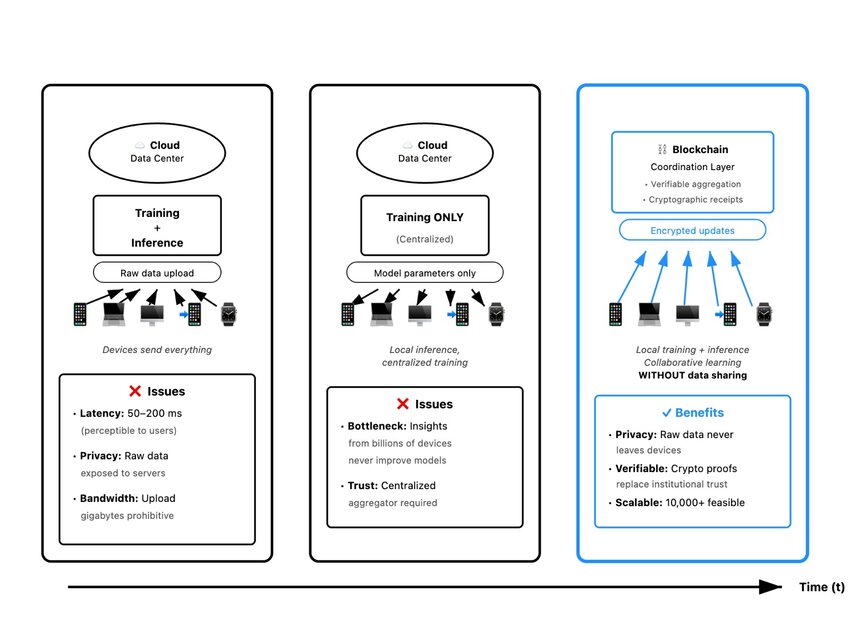
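To make the two cost profiles concrete, here is a minimal Python sketch that counts monthly GPU-hours for each workload. The run counts, run durations, and GPU counts are hypothetical numbers chosen for illustration, and the 30-day month is a simplifying assumption.

```python
# Hypothetical usage profiles: all numbers are illustrative assumptions.
HOURS_PER_MONTH = 24 * 30  # simplifying assumption: 30-day month


def training_gpu_hours(runs_per_month: int, hours_per_run: float, gpus: int) -> float:
    """Bursty: GPUs are only busy while a training run is in progress."""
    return runs_per_month * hours_per_run * gpus


def inference_gpu_hours(gpus: int) -> float:
    """Always-on: serving GPUs stay allocated around the clock."""
    return gpus * HOURS_PER_MONTH


if __name__ == "__main__":
    train = training_gpu_hours(runs_per_month=2, hours_per_run=48, gpus=8)
    serve = inference_gpu_hours(gpus=2)
    print(f"training:  {train:.0f} GPU-hours/month")   # 768
    print(f"inference: {serve:.0f} GPU-hours/month")   # 1440
```

With these assumed numbers, eight GPUs training twice a month still accumulate fewer GPU-hours (768) than two GPUs serving around the clock (1,440). That is why inference cost behaves like an ongoing operating expense rather than a one-off project expense.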

Why Training Is Usually Better in the Cloud

What Training Really Needs

- Very powerful GPUs

- Multi-GPU or multi-node scaling

- High power and cooling for limited periods

Why the Cloud Fits Training Well

- Rent top-tier GPUs only when needed

- No need to manage power, cooling, or hardware failures

- Release resources immediately after training completes

📌 For training, the cloud acts as a flexible compute pool.

When “Training + Cloud” Makes the Most Sense

- Training happens occasionally, not constantly

- Model sizes and architectures change frequently

- You want access to the latest GPU generations

- You want to avoid hardware depreciation risk

👉 For most organizations, cloud-based training is the least risky option.
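A rough way to test the "rent, don't buy" argument is to compare yearly cloud spend with the annualized cost of owning the same GPUs. The sketch below assumes straight-line depreciation and a flat hourly rental rate; every price in it is a hypothetical placeholder, not a quote from any provider.

```python
# All prices below are hypothetical placeholders; substitute your own quotes.

def cloud_training_cost(gpu_hours_per_year: float, rate_per_gpu_hour: float) -> float:
    """Cloud: pay only for the GPU-hours actually consumed."""
    return gpu_hours_per_year * rate_per_gpu_hour


def onprem_yearly_cost(capex: float, lifetime_years: float, opex_per_year: float) -> float:
    """On-prem: straight-line depreciation plus power, cooling, and support.

    This cost is incurred whether the GPUs are busy or idle.
    """
    return capex / lifetime_years + opex_per_year


def breakeven_gpu_hours(capex: float, lifetime_years: float,
                        opex_per_year: float, rate_per_gpu_hour: float) -> float:
    """Yearly GPU-hours above which owning becomes cheaper than renting."""
    return onprem_yearly_cost(capex, lifetime_years, opex_per_year) / rate_per_gpu_hour


if __name__ == "__main__":
    gpus = 8
    # Occasional training: 10 runs per year, 48 hours each, on 8 GPUs.
    used = 10 * 48 * gpus
    print(f"cloud:   ${cloud_training_cost(used, 3.0):,.0f}/year")
    print(f"on-prem: ${onprem_yearly_cost(250_000, 4, 20_000):,.0f}/year")
    hours = breakeven_gpu_hours(250_000, 4, 20_000, 3.0)
    print(f"break-even utilization: {hours / (gpus * 24 * 365):.0%}")
```

Under these assumed figures, the owned server would need to be busy roughly 39% of the time, all year round, before buying beats renting. That is far above what occasional training uses.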

Why Inference Often Belongs On-Prem

What Inference Really Needs

- Models resident in memory

- Predictable, low latency

- Long-term stability

- Cost predictability
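Keeping the model resident means sizing GPUs by memory first. A common back-of-the-envelope estimate is parameters × bytes per parameter for the weights, plus headroom. The sketch below assumes a flat 20% overhead for KV cache, activations, and runtime buffers; that figure is a placeholder, since the real value depends on context length, batch size, and the serving stack.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def resident_memory_gb(params_billion: float, dtype: str = "fp16",
                       overhead: float = 1.2) -> float:
    """Rough VRAM (decimal GB) needed to keep a model loaded for serving.

    Weights: params_billion * 1e9 params * bytes/param / 1e9 bytes per GB,
    which simplifies to params_billion * bytes/param.
    `overhead` is an ASSUMED multiplier for KV cache, activations, and buffers.
    """
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unknown dtype: {dtype}")
    return params_billion * BYTES_PER_PARAM[dtype] * overhead


if __name__ == "__main__":
    for size, dtype in [(7, "fp16"), (7, "int4"), (70, "fp16")]:
        print(f"{size}B @ {dtype}: ~{resident_memory_gb(size, dtype):.1f} GB")
```

Under that assumption, a 7B-parameter model in fp16 needs roughly 17 GB of VRAM for as long as the service runs. This is why memory capacity, rather than peak compute, often decides the inference hardware.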

Why On-Prem Works Well for Inference

- Hardware is a one-time capital investment (power, cooling, and upkeep still apply)

- No perpetual GPU rental fees

- Lowest latency for users and systems on the same network

- Sensitive data stays inside the network

📌 For inference, on-prem is a long-running service platform.

When “Inference + On-Prem” Is the Best Choice

- High-frequency or daily usage

- Latency-sensitive applications

- Internal or confidential data

- Desire for predictable long-term costs

👉 At scale, on-prem inference is often cheaper and more stable than cloud inference.
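The "cheaper at scale" claim can be checked with a simple payback calculation: how many months of avoided cloud bills it takes to recover the hardware purchase. This sketch assumes a flat hourly rate for always-on cloud instances and fixed monthly on-prem operating costs; all figures are hypothetical.

```python
import math

HOURS_PER_MONTH = 24 * 30  # simplifying assumption: 30-day month


def cloud_monthly_cost(gpus: int, rate_per_gpu_hour: float) -> float:
    """Always-on inference: every GPU is billed for every hour of the month."""
    return gpus * rate_per_gpu_hour * HOURS_PER_MONTH


def breakeven_months(capex: float, onprem_opex_per_month: float,
                     cloud_cost_per_month: float) -> float:
    """Months until cumulative on-prem cost falls below cumulative cloud cost.

    Returns math.inf when on-prem never pays off (no monthly savings).
    """
    monthly_savings = cloud_cost_per_month - onprem_opex_per_month
    if monthly_savings <= 0:
        return math.inf
    return capex / monthly_savings


if __name__ == "__main__":
    cloud = cloud_monthly_cost(gpus=2, rate_per_gpu_hour=2.5)  # hypothetical rate
    months = breakeven_months(capex=56_000, onprem_opex_per_month=800,
                              cloud_cost_per_month=cloud)
    print(f"cloud: ${cloud:,.0f}/month, on-prem pays back in {months:.0f} months")
```

If the payback period is longer than the hardware's expected service life, the cloud remains cheaper; the shorter the payback, the stronger the case for on-prem inference.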

Is Cloud Inference Ever a Good Idea?

Yes—but with clear trade-offs.

Advantages of Cloud Inference

- Fast to deploy

- Easy to scale temporarily

- No hardware management

Hidden Costs of Cloud Inference

- 24/7 GPU rental fees

- GPU instances billed continuously while the model stays loaded in VRAM

- Network latency and variability

- Long-term costs can quietly explode

📌 Once inference becomes a daily service, cloud costs escalate quickly.
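Because cloud GPUs are billed for the hours they are allocated, the bill scales with uptime rather than with the number of requests served. This minimal sketch, using a hypothetical hourly rate, compares an endpoint started only for a short daily batch with one kept warm around the clock.

```python
def monthly_gpu_bill(gpus: int, rate_per_gpu_hour: float,
                     hours_up_per_day: float, days: int = 30) -> float:
    """Cloud GPU cost for instances allocated hours_up_per_day each day."""
    if not 0 <= hours_up_per_day <= 24:
        raise ValueError("hours_up_per_day must be between 0 and 24")
    return gpus * rate_per_gpu_hour * hours_up_per_day * days


if __name__ == "__main__":
    rate = 2.5  # hypothetical $/GPU-hour
    occasional = monthly_gpu_bill(gpus=1, rate_per_gpu_hour=rate, hours_up_per_day=2)
    always_on = monthly_gpu_bill(gpus=1, rate_per_gpu_hour=rate, hours_up_per_day=24)
    print(f"2 h/day:  ${occasional:,.0f}/month")
    print(f"24 h/day: ${always_on:,.0f}/month ({always_on / occasional:.0f}x)")
```

In this example the same model costs 12x more per month once it must stay available all day, even if request volume barely changes. That is the point where the on-prem comparison becomes worth running.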

Training / Inference × Cloud / On-Prem Matrix

| Workload | Cloud | On-Prem |
|---|---|---|
| Training | ✅ Excellent fit | ❌ Costly, inflexible |
| Inference (high frequency) | ⚠️ Cost risk | ✅ Stable & economical |
| Inference (low frequency) | ✅ Convenient | ⚠️ Underutilized hardware |
| Sensitive data | ⚠️ Requires controls | ✅ Strongest isolation |
| Rapid experimentation | ✅ Ideal | ⚠️ Slower setup |

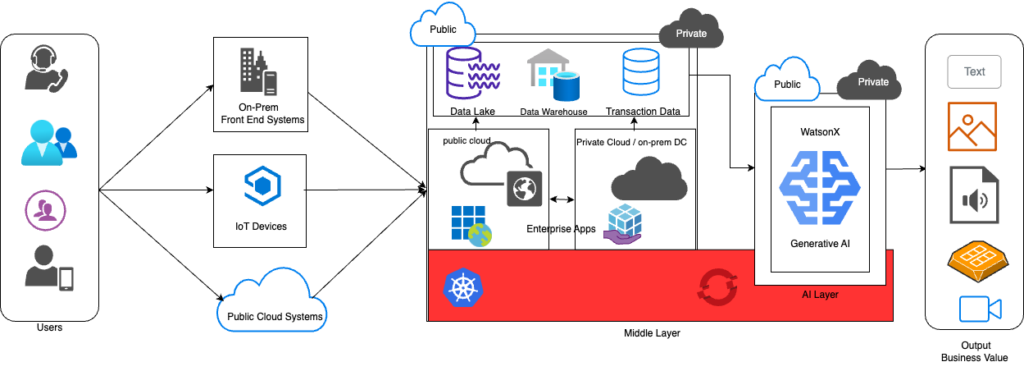

The Most Practical Approach: Hybrid Architecture

Many mature AI teams converge on this model:

Train in the cloud. Run inference on-prem.

Why this works well

- Maximum flexibility for training

- Minimum long-term cost for inference

- Clear separation of responsibilities

- Easier risk and cost control

📌 This split is one of the most common real-world architectures today.

A Simple Decision Flow (Practical)

Ask these questions in order:

- Am I training models or running inference?

- Is this workload occasional or daily?

- Do I care more about latency, data control, or flexibility?

- Is this cost bursty or continuous?

👉 In most cases, the answer becomes obvious.
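The four questions can be written as a small rule-of-thumb function. This is a hypothetical sketch that follows the Training / Inference × Cloud / On-Prem matrix (the function name, labels, and rules are illustrative), meant as a starting point for discussion rather than a substitute for a real cost and compliance review.

```python
from typing import Literal

Kind = Literal["training", "inference"]
Priority = Literal["latency", "data_control", "flexibility"]


def suggest_placement(kind: Kind, daily: bool, priority: Priority) -> str:
    """Hypothetical rule of thumb following the four questions in order.

    Q1 kind: training or inference.
    Q2 daily: is the workload daily (True) or occasional (False)?
    Q3 priority: latency, data control, or flexibility.
    Q4 (bursty vs continuous cost) is derived from Q1 and Q2 here.
    """
    if kind not in ("training", "inference"):
        raise ValueError(f"unknown workload kind: {kind}")
    if priority not in ("latency", "data_control", "flexibility"):
        raise ValueError(f"unknown priority: {priority}")

    if kind == "training":
        # Training is bursty compute: rent elastic capacity.
        # Sensitive training data can still go to the cloud, but only with controls.
        # (Continuously running training is an exception worth costing separately.)
        return "cloud + data controls" if priority == "data_control" else "cloud"

    # Inference that runs daily (continuous cost), is latency-sensitive,
    # or handles confidential data favors a long-running on-prem service.
    if daily or priority in ("latency", "data_control"):
        return "on-prem"

    # Occasional, flexibility-first inference: avoid underutilized hardware.
    return "cloud"


if __name__ == "__main__":
    print(suggest_placement("training", daily=False, priority="flexibility"))   # cloud
    print(suggest_placement("inference", daily=True, priority="flexibility"))   # on-prem
    print(suggest_placement("inference", daily=False, priority="flexibility"))  # cloud
```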

One Sentence to Remember

Training optimizes for elastic compute.

Inference optimizes for long-term stability.

Final Conclusion

There is no “cloud-only” or “on-prem-only” rule—only the question of where a specific workload fits best.

- Training → usually cloud

- Inference → often on-prem

- Mature systems → hybrid

Understanding this distinction prevents:

- Overspending on GPUs

- Over-engineering infrastructure

- Surprises in long-term AI costs