A common question in the AI and GPU world is:

“AMD GPUs are powerful too—so why can’t they run CUDA?”

You may also hear comments like:

- “A GPU is a GPU, right?”

- “Is NVIDIA locking down the ecosystem?”

- “Does this mean AMD GPUs are a bad choice for AI?”

This article explains the answer from a technical and ecosystem perspective, without taking sides.

Short Answer (Key Takeaway)

CUDA is an NVIDIA-exclusive parallel computing platform.

AMD GPUs do not support CUDA by design.

This is not about raw performance or hardware capability.

It is about software platforms and ecosystem decisions.

What Is CUDA, and Why Is It Tied to NVIDIA GPUs?

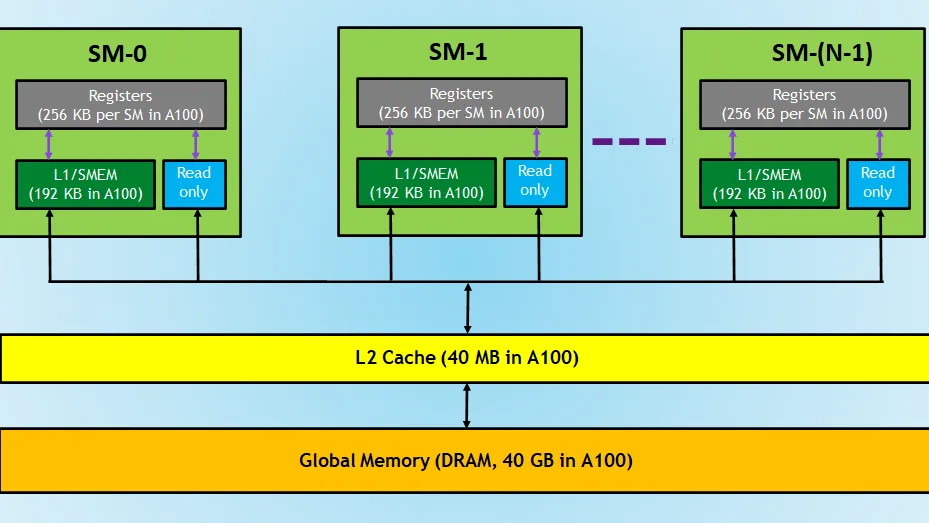

CUDA is a parallel computing platform developed by NVIDIA.

It includes:

- A GPU execution model

- Memory management rules

- A compiler (nvcc), runtime, and toolchain
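To make the "GPU execution model" bullet more concrete: a CUDA kernel runs as a grid of thread blocks, and each thread typically computes its global index as `blockIdx.x * blockDim.x + threadIdx.x`. The Python sketch below only simulates that indexing on the CPU for a vector add. It is not CUDA code, it does not touch a GPU, and `launch_vector_add` is a hypothetical name.

```python
def launch_vector_add(a, b, threads_per_block=4):
    """Simulate a 1-D CUDA-style launch of a vector-add kernel on the CPU.

    Hypothetical helper: each (block, thread) pair computes its global index
    the way a CUDA thread would from blockIdx.x, blockDim.x, and threadIdx.x.
    """
    n = len(a)
    out = [0.0] * n
    # Round up so every element is covered, as a real launch configuration would.
    num_blocks = (n + threads_per_block - 1) // threads_per_block
    for block_idx in range(num_blocks):              # plays the role of blockIdx.x
        for thread_idx in range(threads_per_block):  # plays the role of threadIdx.x
            i = block_idx * threads_per_block + thread_idx
            # Bounds check: threads in the last block may fall past the end.
            if i < n:
                out[i] = a[i] + b[i]
    return out, num_blocks


result, blocks = launch_vector_add([1.0, 2.0, 3.0, 4.0, 5.0],
                                   [10.0, 20.0, 30.0, 40.0, 50.0])
print(result, blocks)  # [11.0, 22.0, 33.0, 44.0, 55.0] 2
```

On a real GPU all of these threads run in parallel; the nested loops here only reproduce which thread handles which element.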

📌 The critical point:

CUDA was designed from day one to work only with NVIDIA GPUs.

It follows the same pattern as other vendor-specific platforms:

- DirectX is closely tied to Microsoft’s ecosystem

- Metal is exclusive to Apple hardware

👉 CUDA is NVIDIA’s proprietary GPU computing platform

Why AMD GPUs Cannot “Just Run CUDA”

Reason 1: CUDA Is Not a Cross-Platform Standard

CUDA is not an open, cross-vendor technology like:

- C

- Python

- OpenCL

It is a vendor-specific platform with:

- Proprietary APIs

- NVIDIA-only drivers

- NVIDIA-only runtime libraries

👉 AMD GPUs do not ship with the CUDA runtime, and NVIDIA’s nvcc compiler does not generate code for them
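In practice, running existing CUDA source on AMD hardware means porting it. AMD's ROCm stack (covered below) includes HIP, a runtime API that mirrors many CUDA runtime names (`cudaMalloc` becomes `hipMalloc`, `cudaMemcpy` becomes `hipMemcpy`), along with HIPIFY tools that automate much of this renaming. The toy Python sketch below only illustrates the renaming idea with a small hand-picked table. `toy_hipify` is hypothetical and is not HIPIFY; a real port also involves build changes, library substitutions, and testing.

```python
import re

# A small, hand-picked subset of CUDA runtime names and their HIP counterparts.
# Illustrative only: the real HIPIFY tools cover far more of the API.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Match whole identifiers only, trying longer names first so that
# "cudaMemcpyHostToDevice" is not cut short by "cudaMemcpy".
_PATTERN = re.compile(
    r"\b(?:"
    + "|".join(re.escape(k) for k in sorted(CUDA_TO_HIP, key=len, reverse=True))
    + r")\b"
)


def toy_hipify(source: str) -> str:
    """Rename the CUDA identifiers listed above to their HIP equivalents."""
    return _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(0)], source)


cuda_src = """#include <cuda_runtime.h>
float *d_x;
cudaMalloc(&d_x, n * sizeof(float));
cudaMemcpy(d_x, h_x, n * sizeof(float), cudaMemcpyHostToDevice);
cudaDeviceSynchronize();
cudaFree(d_x);
"""
print(toy_hipify(cuda_src))
```

The one-to-one naming is why simple ports can look mechanical; the harder work usually lies in performance tuning and in libraries that have no drop-in equivalent.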

Reason 2: AI Frameworks Are CUDA-First

Most major AI frameworks are built with CUDA as the primary backend:

- PyTorch

- TensorFlow

- JAX

This means:

- Documentation focuses on CUDA

- New features often appear on CUDA first

- Performance tuning targets CUDA first

AMD GPUs are supported by some of these frameworks, but support often arrives later, covers fewer features, or requires extra setup effort.

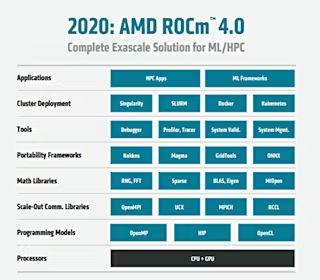

AMD’s Alternative: ROCm

AMD is not ignoring GPU computing.

They developed ROCm (Radeon Open Compute):

- AMD’s GPU compute platform

- Functionally similar to CUDA

- Supports PyTorch and TensorFlow (to a degree)
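PyTorch shows what that support looks like in code. ROCm builds of PyTorch reuse the existing `torch.cuda` interface, so `torch.cuda.is_available()` also returns `True` on a working AMD setup, and `torch.version.hip` (set only on ROCm builds) is one way to tell the two apart. The following is a minimal sketch that assumes PyTorch may or may not be installed; `detect_backend` is a hypothetical helper name.

```python
def detect_backend():
    """Return 'cuda', 'rocm', or 'cpu' for the local PyTorch build.

    Hypothetical helper. Falls back to 'cpu' when PyTorch is not installed
    or no supported GPU is visible.
    """
    try:
        import torch
    except ImportError:
        return "cpu"

    if not torch.cuda.is_available():
        return "cpu"
    # ROCm builds set torch.version.hip; CUDA builds leave it as None.
    if getattr(torch.version, "hip", None):
        return "rocm"
    return "cuda"


backend = detect_backend()
# On both NVIDIA and ROCm builds, PyTorch addresses the GPU as device "cuda".
device = "cpu" if backend == "cpu" else "cuda"
print(backend, device)
```

Because the device string is `"cuda"` in both cases, much CUDA-first PyTorch code runs on ROCm unchanged. The gaps tend to appear in custom CUDA extensions and third-party kernels.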

So why isn’t ROCm as widely used?

Why ROCm Is Still Challenging in Practice

1️⃣ Limited Hardware Support

- Not all AMD GPUs support ROCm

- Consumer GPUs may be partially supported or unsupported

2️⃣ Operating System Constraints

- Linux support is strongest

- Windows support is limited

- Driver and kernel versions are tightly coupled
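Because hardware and OS support both gate a ROCm setup, it can help to write these checks down before buying or installing anything. ROCm identifies a GPU by its gfx target name (for example `gfx90a`), which `rocminfo` reports. The sketch below is a hypothetical pre-flight helper. The supported-target set is a placeholder that you would need to fill in from AMD's official compatibility matrix for the exact ROCm release you plan to use.

```python
import platform
from dataclasses import dataclass, field


@dataclass
class RocmPreflight:
    """Hypothetical pre-flight check before committing to a ROCm setup."""

    # Fill this from AMD's official ROCm compatibility matrix for your
    # ROCm release; nothing in this sketch is an official support list.
    supported_targets: set
    # Linux is where ROCm support is strongest.
    supported_os: set = field(default_factory=lambda: {"Linux"})

    def problems(self, gpu_target: str, os_name: str) -> list:
        """Return human-readable blockers; an empty list means none were found."""
        issues = []
        if gpu_target not in self.supported_targets:
            issues.append(f"GPU target {gpu_target!r} is not on the supported list")
        if os_name not in self.supported_os:
            issues.append(f"OS {os_name!r} is outside the supported set")
        return issues


# Placeholder targets for illustration only, not an official support statement.
check = RocmPreflight(supported_targets={"gfx90a", "gfx942"})
print(check.problems("gfx942", "Linux"))             # []
print(check.problems("gfx1030", "Windows"))          # two blockers
print(check.problems("gfx942", platform.system()))   # depends on this machine
```

A check like this does not cover driver and kernel version coupling. Those still have to be matched against the release notes of the specific ROCm version.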

3️⃣ Smaller Ecosystem

- CUDA has been maturing since its first release in 2007

- ROCm is still catching up

- Fewer tutorials, examples, and community resources

4️⃣ Higher Engineering Cost

- CUDA: often “works out of the box”

- ROCm: requires tuning, testing, and troubleshooting

👉 Engineering effort is a real cost

Is NVIDIA “Locking the Ecosystem”?

This is a common question.

A more balanced explanation is:

- NVIDIA invested early and heavily in CUDA

- Built strong tooling and developer support

- Created a de facto industry standard

📌 The result:

It’s not that AMD didn’t try—CUDA simply has a long head start

Should You Avoid AMD GPUs?

It depends on your use case.

AMD GPUs May Be a Good Fit If:

- Your models are confirmed to work with ROCm

- You use Linux

- You have strong engineering resources

- Budget is a primary concern

AMD GPUs May Not Be Ideal If:

- You are new to AI

- You need fast proof-of-concept results

- Stability and predictability matter

- You plan large-scale or multi-GPU training
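The two checklists above can be read as a rough rubric. The sketch below encodes them in a hypothetical helper. Two things in it are assumptions made for illustration, not a formal method: the equal weighting, and the rule that treating unverified model support is a hard blocker.

```python
from dataclasses import dataclass


@dataclass
class Project:
    """Hypothetical profile mirroring the two checklists above."""
    models_verified_on_rocm: bool
    uses_linux: bool
    strong_engineering_team: bool
    budget_is_primary: bool
    new_to_ai: bool
    needs_fast_poc: bool
    stability_critical: bool
    large_scale_multi_gpu: bool


def amd_fit(p: Project) -> str:
    """Rough verdict; equal weights and the blocker rule are assumptions."""
    # Assumption: unverified model support blocks everything else.
    if not p.models_verified_on_rocm:
        return "verify ROCm support first"
    # Count the remaining "good fit" and "not ideal" items with equal weight.
    pros = sum([p.uses_linux, p.strong_engineering_team, p.budget_is_primary])
    cons = sum([p.new_to_ai, p.needs_fast_poc,
                p.stability_critical, p.large_scale_multi_gpu])
    return "AMD may be a good fit" if pros > cons else "AMD may not be ideal"


research_team = Project(
    models_verified_on_rocm=True, uses_linux=True, strong_engineering_team=True,
    budget_is_primary=True, new_to_ai=False, needs_fast_poc=False,
    stability_critical=False, large_scale_multi_gpu=False,
)
print(amd_fit(research_team))  # AMD may be a good fit
```

In a real decision, some items (for example large-scale multi-GPU training) may deserve far more weight than others. Treat the output as a prompt for discussion, not a verdict.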

One Sentence That Clarifies Everything

AMD GPUs are not slow—CUDA simply wasn’t designed for them.

Final Summary

- CUDA is an NVIDIA-exclusive platform

- AMD GPUs cannot natively run CUDA

- ROCm is AMD’s alternative, but the ecosystem is still maturing

- In AI training, software maturity often matters more than raw hardware specs

One Line to Remember

In the AI world,

hardware is the battlefield,

but CUDA and ROCm define the ecosystem war.

For now, CUDA remains the dominant platform.