In AI discussions, you often hear these two statements together:

- “AI training requires powerful GPUs.”

- “AI inference doesn’t always need a strong GPU.”

This is not a contradiction.

👉 It’s because training and inference are fundamentally different workloads, with very different goals, constraints, and hardware requirements.

This article explains where the difference comes from and why mixing them up leads to poor hardware decisions.

Short Answer (One-Sentence Takeaway)

AI training optimizes for maximum compute throughput, while AI inference optimizes for efficiency, latency, and stability.

As a result, their GPU requirements point in completely different directions.

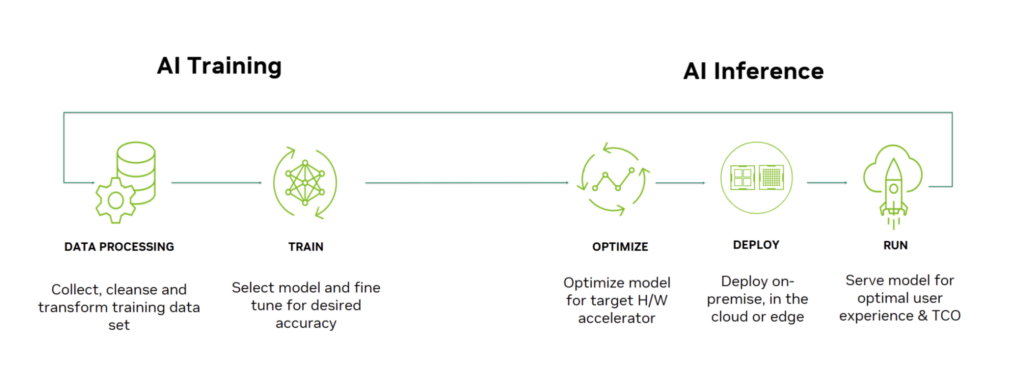

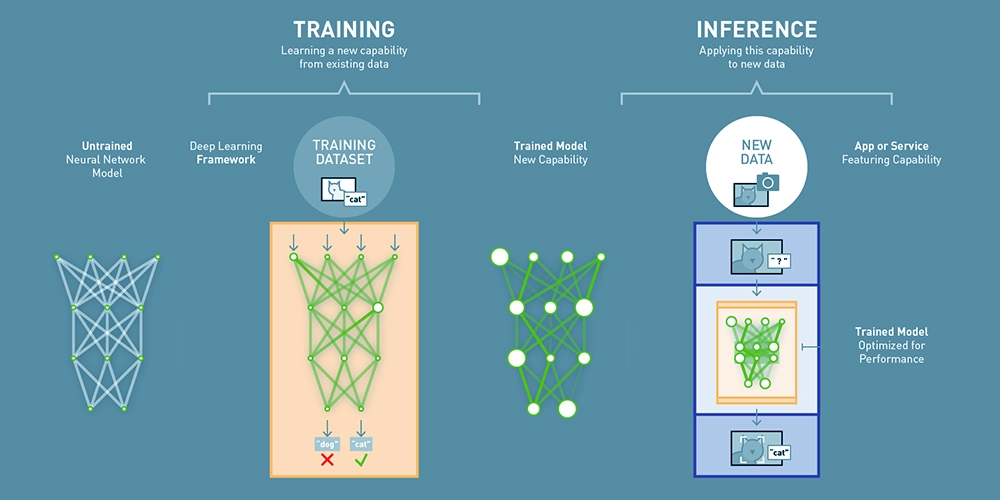

First: Define the Two Phases Clearly

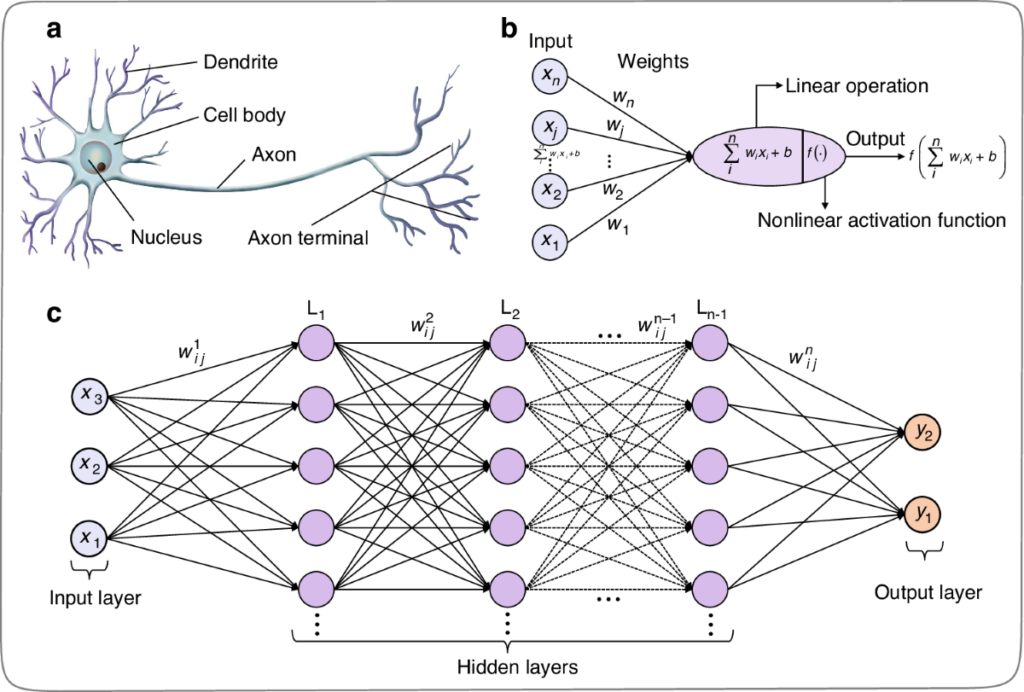

🧠 AI Training

- Purpose: Teach the model

- What happens:

  - Forward pass

  - Backward pass (backpropagation)

  - Weight updates

- Characteristics:

  - Extremely compute-intensive

  - Repeated millions or billions of times

  - Runs for hours, days, or weeks

💬 AI Inference

- Purpose: Use the trained model

- What happens:

  - Forward pass only

  - No weight updates

- Characteristics:

  - Lighter computation

  - Highly latency-sensitive

  - Often runs continuously in production
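
To make the two phases concrete, here is a minimal sketch in plain Python. It assumes a toy one-weight model `y = w * x` trained with gradient descent on squared error; the names `forward`, `train_step`, and `infer` are illustrative and not taken from any framework.

```python
# Toy model y = w * x, trained with plain gradient descent on squared error.
# All names here (forward, train_step, infer) are illustrative.

def forward(w, x):
    """Forward pass: compute the prediction."""
    return w * x

def train_step(w, x, y_true, lr=0.1):
    """One training step: forward pass, backward pass, weight update."""
    y_pred = forward(w, x)                 # forward pass
    grad_w = 2.0 * (y_pred - y_true) * x   # backward pass: d(loss)/dw for loss = (y_pred - y_true)**2
    return w - lr * grad_w                 # weight update

def infer(w, x):
    """Inference: forward pass only, the weight stays fixed."""
    return forward(w, x)

w = 0.0
for _ in range(50):            # training repeats the step many times
    w = train_step(w, x=1.0, y_true=3.0)

prediction = infer(w, 2.0)     # serving reuses the frozen weight
print(f"learned w = {w:.4f}, prediction for x=2: {prediction:.4f}")
```

Even in this toy, `train_step` does everything `infer` does plus a gradient computation and an update, and it has to be called in a loop before the model is useful.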

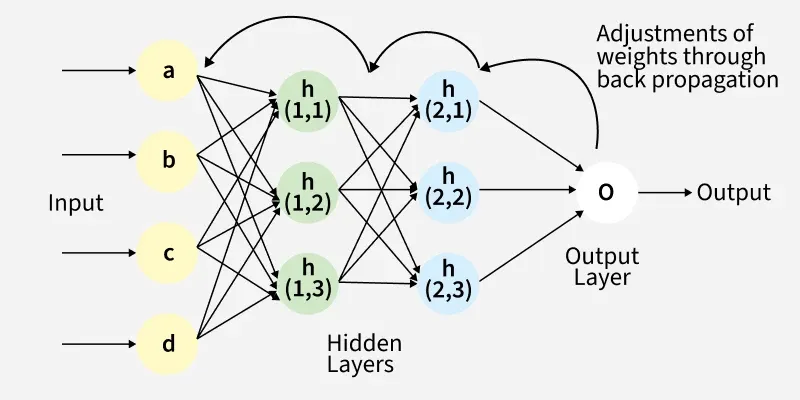

What Training Is Really Computing (and Why It Eats GPUs)

Key Properties of Training Workloads

- Massive matrix–matrix multiplication

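- The backward pass costs roughly twice as much as the forward pass, so a training step needs about three times the compute of a forward pass alone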

- Intermediate activations must be stored (high memory usage)

- Can run at full utilization for long periods

👉 These are exactly the workloads GPUs—especially CUDA-based GPUs—are designed for.
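
A rough back-of-the-envelope comparison shows the size of the gap. The sketch below relies on commonly quoted rules of thumb that are assumptions here, not measurements: about 2 FLOPs per parameter per token for a forward pass, about 4 more for the backward pass, and about 16 bytes per parameter for mixed-precision training with an Adam-style optimizer (before activations). The 7B-parameter model is hypothetical.

```python
# Rule-of-thumb estimates for a dense model; every constant is an assumption.
FORWARD_FLOPS_PER_PARAM = 2    # one multiply + one add per weight per token
BACKWARD_FLOPS_PER_PARAM = 4   # backward pass is roughly 2x the forward pass

def flops_per_token(n_params, training):
    per_param = FORWARD_FLOPS_PER_PARAM
    if training:
        per_param += BACKWARD_FLOPS_PER_PARAM
    return n_params * per_param

def weight_state_bytes(n_params, training):
    """Memory for weights (and training state), excluding activations and KV cache."""
    if training:
        # Assumed mixed-precision Adam layout: FP16 weights (2) + FP16 grads (2)
        # + FP32 master weights, momentum, variance (4 + 4 + 4) = 16 bytes/param.
        return n_params * 16
    return n_params * 2  # FP16 weights only

n = 7_000_000_000  # hypothetical 7B-parameter model
train_flops = flops_per_token(n, training=True)
infer_flops = flops_per_token(n, training=False)
train_gb = weight_state_bytes(n, training=True) / 1e9
infer_gb = weight_state_bytes(n, training=False) / 1e9
print(f"compute per token: training is {train_flops / infer_flops:.0f}x inference")
print(f"weights + state: {train_gb:.0f} GB training vs {infer_gb:.0f} GB inference")
```

Under these assumptions the script reports 3x the compute per token and 112 GB versus 14 GB of memory, and that is before counting stored activations, which push the training figure higher.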

Training GPUs must prioritize:

- Raw FP16 / BF16 / FP32 compute

- Large numbers of GPU cores

- Multi-GPU scalability

- High-bandwidth VRAM

What Inference Is Really Computing (and Why It’s Different)

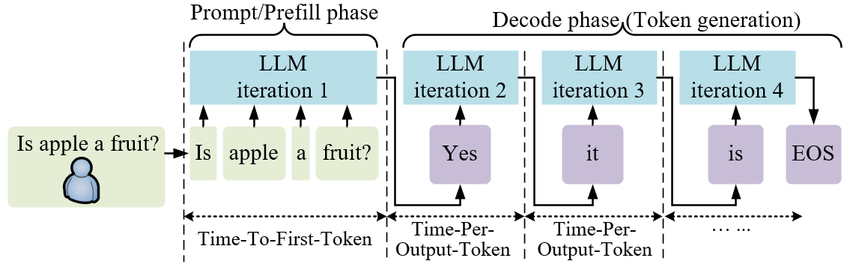

Key Properties of Inference Workloads

- Forward pass only (no backpropagation)

- Token-by-token or small-batch execution

- Extremely sensitive to latency

- Often serves many users over long periods

👉 Inference is not about “maximum speed”; it’s about consistent response time and efficiency.

A Crucial but Often Ignored Difference: Time Scale

Training Time Perspective

- Minutes, hours, or days are acceptable

- Slight slowdowns don’t matter

- Completion matters more than responsiveness

Inference Time Perspective

- 50–100 ms delays are noticeable

- Latency spikes degrade user experience

- Systems must remain stable 24/7

👉 Inference behaves like a real-time system. Training does not.
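
One way to see why spikes matter more than averages is to look at tail latency. The sketch below uses only the Python standard library; the latency samples and the 100 ms budget are made-up values for illustration, and `latency_summary` is a hypothetical helper.

```python
import statistics

def latency_summary(samples_ms):
    """Return median and tail latency; the tail is what users notice."""
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "p50": statistics.median(samples_ms),
        "p99": cuts[98],          # 99th percentile (last of the 99 cut points)
        "max": max(samples_ms),
    }

# Hypothetical request latencies in ms: mostly fast, with a few spikes.
samples = [40.0] * 95 + [400.0] * 5
summary = latency_summary(samples)
mean_ms = statistics.fmean(samples)

BUDGET_MS = 100.0  # assumed latency budget
over_budget = sum(s > BUDGET_MS for s in samples) / len(samples)

print(f"mean={mean_ms:.0f} ms, p50={summary['p50']:.0f} ms, p99={summary['p99']:.0f} ms")
print(f"{over_budget:.0%} of requests exceed the {BUDGET_MS:.0f} ms budget")
```

With these samples the mean is 58 ms and the median is 40 ms, which both look healthy, while the p99 is 400 ms and 5% of requests miss the budget. A training job would not care about that distribution; a user-facing service does.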

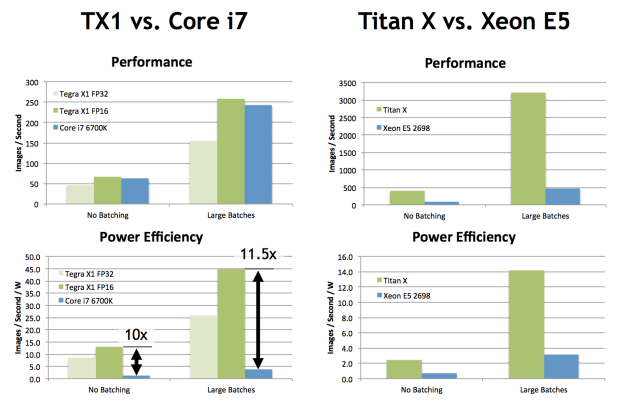

Why Inference Does NOT Always Require a GPU

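Because raw compute is often not the bottleneck: a forward pass over a single request or a small batch leaves most of a large GPU’s arithmetic units idle.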

Common inference bottlenecks include:

- Whether the model fits in memory

- Token-generation latency

- CPU–GPU coordination

- IO, batching, and scheduling overhead

📌 This is why the following can be perfectly sufficient for inference workloads:

- Apple M-series chips

- Small GPUs

- Even high-end CPUs
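
The first bottleneck, whether the model fits in memory, is easy to estimate. The sketch below is a rough check under stated assumptions: a hypothetical 7B-parameter model, an 8 GB device, and an arbitrary 20% overhead for the KV cache and runtime buffers. The helper names are illustrative.

```python
def model_weight_gb(n_params, bits_per_weight):
    """Size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9

def fits_in_memory(n_params, bits_per_weight, memory_gb, overhead_fraction=0.2):
    """True if weights plus an assumed overhead (KV cache, runtime buffers) fit."""
    needed_gb = model_weight_gb(n_params, bits_per_weight) * (1 + overhead_fraction)
    return needed_gb <= memory_gb

n = 7_000_000_000  # hypothetical 7B-parameter model
for bits in (16, 8, 4):
    verdict = "fits in 8 GB" if fits_in_memory(n, bits, 8) else "does not fit in 8 GB"
    print(f"{bits}-bit: {model_weight_gb(n, bits):.1f} GB of weights, {verdict}")
```

Under these assumptions the 16-bit and 8-bit versions do not fit, while the 4-bit version (about 3.5 GB of weights) does. That is the kind of question that decides whether modest hardware is enough, and peak compute never enters into it.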

Training vs Inference: GPU Requirement Comparison

| Aspect | Training | Inference |
|---|---|---|
| Primary goal | Learn the model | Use the model |
| Computation | Forward + backward | Forward only |
| GPU compute | Extremely high | Moderate |
| Memory usage | Very high | Model-size dependent |
| Latency sensitivity | Low | Very high |
| Hardware flexibility | Mostly GPU-only | CPU / GPU / NPU |

How This Difference Impacts Hardware Selection

If Your Workload Is Training-Focused

You should prioritize:

- GPU model and compute capability

- CUDA or ROCm ecosystem

- Multi-GPU scalability

- Power and cooling capacity

👉 Data-center mindset

If Your Workload Is Inference-Focused

You should prioritize:

- Memory capacity

- Latency stability

- Power efficiency

- Deployment and operational cost

👉 System architecture and user-experience mindset

Why People Often Choose the Wrong GPU

Because they treat training and inference as the same problem.

Common mistakes:

- Using training-class GPUs for personal inference (overkill)

- Expecting inference-class hardware to train large models (usually impractical: too little memory and compute)

- Comparing FLOPS instead of latency and memory behavior
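
The last mistake can be illustrated with a simple roofline-style estimate. The sketch assumes single-request (batch size 1) token generation, where every weight is read from memory once per token and costs about 2 FLOPs; it ignores the KV cache, batching, and kernel overheads. Both devices and all of their numbers are made up for illustration.

```python
def per_token_time_ms(n_params, bytes_per_weight, peak_tflops, bandwidth_gb_s):
    """Lower bound on batch-1 decode time per token under a simple roofline assumption."""
    compute_s = 2 * n_params / (peak_tflops * 1e12)                  # ~2 FLOPs per weight
    memory_s = n_params * bytes_per_weight / (bandwidth_gb_s * 1e9)  # read every weight once
    bound = "memory" if memory_s > compute_s else "compute"
    return max(compute_s, memory_s) * 1e3, bound

n = 7_000_000_000  # hypothetical 7B-parameter model with FP16 (2-byte) weights
# Two made-up devices: A has 4x the FLOPS of B but the same memory bandwidth.
device_a = per_token_time_ms(n, 2, peak_tflops=400, bandwidth_gb_s=1000)
device_b = per_token_time_ms(n, 2, peak_tflops=100, bandwidth_gb_s=1000)

print(f"device A: {device_a[0]:.1f} ms/token ({device_a[1]}-bound)")
print(f"device B: {device_b[0]:.1f} ms/token ({device_b[1]}-bound)")
```

In this simplified model both devices land at about 14 ms per token because both are limited by memory bandwidth, so the 4x FLOPS advantage of device A buys nothing for this workload.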

One Sentence to Remember

Training is about building the model, which requires brute force; inference is about serving the model, which requires efficiency and stability.

Final Conclusion

Training and inference are not the same task at different scales.

They are fundamentally different workloads with different optimization goals.

Understanding this distinction helps you:

- Choose the right GPU

- Control costs

- Design better AI systems