When teams start adopting RAG (Retrieval-Augmented Generation), a common question appears:

“Should RAG be part of training?”

“Should we fine-tune the model with our documents instead?”

Before going any further, here is the most important conclusion:

👉 RAG almost always belongs in the inference layer—not the training layer.

This is not a tooling limitation.

It is a fundamental architectural truth.

One-Sentence Takeaway

RAG exists to retrieve data and assemble context at runtime.

That makes it an inference concern, not a training concern.

First, Clear a Common Misunderstanding: RAG ≠ Teaching the Model

Many people think RAG means:

“Making the model remember company documents.”

That expectation is incorrect.

What RAG actually does:

- It does not change model weights

- It does not retrain the model

- It does not store long-term memory

👉 RAG simply supplies relevant information just before the model answers.

In other words:

RAG is real-time context injection, not knowledge training.

Why RAG Is Naturally an Inference-Layer Component

The three defining characteristics of RAG described below are all inference-time behaviors.

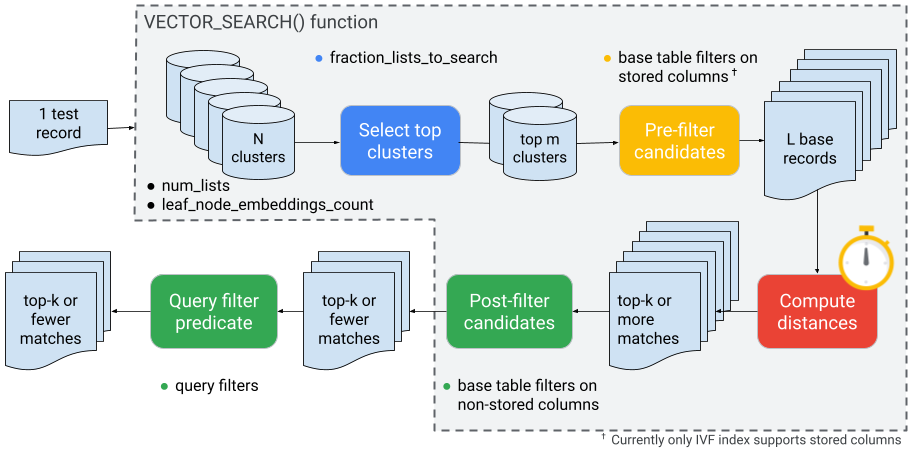

① RAG Is Real-Time Retrieval, Not Offline Learning

The first step of RAG is:

- Take the user’s question

- Run a vector search

- Retrieve the most relevant documents right now

📌 The key word is now.

- Documents change

- Policies change

- Answers depend on current data

👉 This kind of dynamic behavior cannot be precomputed during training.
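The retrieval step above can be sketched in a few lines. This is a minimal illustration, not a production retriever: `embed` is a toy bag-of-words stand-in for a real embedding model, and `retrieve`, `cosine`, and the sample documents are hypothetical names and data chosen for the example.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (a stand-in for a real embedding model)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank the documents that exist right now against the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

docs = [
    "vacation policy: employees get 10 days of leave",
    "expense policy: submit receipts within 30 days",
]
before = retrieve("how many days of vacation leave", docs)

# The policy changes; the very next query sees the new text.
docs[0] = "vacation policy: employees get 15 days of leave"
after = retrieve("how many days of vacation leave", docs)
```

Nothing about the model was touched between the two calls. Only the document list changed, and the second query picked up the change immediately.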

② RAG Affects Only the Current Answer

Documents retrieved by RAG:

- Are injected into the prompt or context

- Are discarded after the response

- Never modify the model itself

📌 This means:

- No learning

- No persistent memory

- No behavioral change in the model

👉 Using a fixed model to produce a single answer is exactly what inference means.
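A small sketch makes the "current answer only" point concrete. It assumes a hypothetical `FrozenModel` stub in place of a real LLM, and `build_prompt` and `answer` are illustrative names. The retrieved text lives only inside the prompt string for one call.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FrozenModel:
    """Hypothetical stand-in for an LLM; frozen so its weights cannot be reassigned."""
    weights: tuple[float, ...]

    def generate(self, prompt: str) -> str:
        # A real model would run a forward pass; this stub only reports prompt size.
        return f"(answer based on {len(prompt)} prompt characters)"

def build_prompt(question: str, retrieved: list[str]) -> str:
    """Place the retrieved passages ahead of the question in one prompt string."""
    context = "\n".join(f"- {doc}" for doc in retrieved)
    return f"Use only this context:\n{context}\n\nQuestion: {question}"

def answer(model: FrozenModel, question: str, retrieved: list[str]) -> str:
    prompt = build_prompt(question, retrieved)  # exists only for this call
    return model.generate(prompt)

model = FrozenModel(weights=(0.1, 0.2, 0.3))
weights_before = model.weights
reply = answer(model, "What is the refund window?", ["Refunds are accepted within 30 days."])
weights_after = model.weights  # identical to weights_before
```

Once `answer` returns, the prompt goes out of scope. The next question starts from the same weights and a fresh retrieval.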

③ RAG Requires the Latest Data, Not Historical Snapshots

If documents are baked into the model through training or fine-tuning:

- New documents → retrain required

- Corrections → retrain required

- Deletions → learned content is hard to remove from the weights

📌 For enterprises, this is extremely risky.

👉 Inference-layer RAG allows instant updates without touching the model.
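By contrast, here is roughly what an update looks like when documents live in a retrieval store. `DocumentStore` is a hypothetical in-memory class with simple word-overlap scoring; a real deployment would use a vector database, but the add, correct, and delete operations follow the same idea.

```python
class DocumentStore:
    """Hypothetical in-memory store; a real system would use a vector database."""

    def __init__(self) -> None:
        self._docs: dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        """Add a new document or overwrite (correct) an existing one."""
        self._docs[doc_id] = text

    def delete(self, doc_id: str) -> None:
        """Remove a document; it can no longer be retrieved after this call."""
        self._docs.pop(doc_id, None)

    def search(self, query: str) -> list[str]:
        """Return ids of documents sharing at least one word with the query, best first."""
        terms = set(query.lower().split())
        scored = [
            (len(terms & set(text.lower().split())), doc_id)
            for doc_id, text in self._docs.items()
        ]
        return [doc_id for score, doc_id in sorted(scored, reverse=True) if score > 0]

store = DocumentStore()
store.upsert("policy-v1", "remote work allowed two days per week")
first = store.search("remote work days")

store.upsert("policy-v1", "remote work allowed three days per week")  # correction
store.upsert("faq-7", "remote work equipment is reimbursed")          # new document
second = store.search("remote work days")

store.delete("faq-7")                                                  # removal
third = store.search("remote work days")
```

Each change takes effect on the next search, with no training run in between.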

What Happens If You Put RAG in the Training Layer?

Here are the real problems teams encounter.

❌ Problem 1: Cost Explosion

- Training and fine-tuning require dedicated GPU time for every run

- Every document update triggers retraining

- Cost scales with document volume

👉 RAG exists precisely to avoid retraining.

❌ Problem 2: Stale or Irreversible Knowledge

- Old documents become baked into the model's weights

- Removing specific knowledge from the weights becomes difficult and hard to verify

- Compliance, legal, and policy risks increase

👉 Inference-layer RAG allows immediate removal of documents.

❌ Problem 3: Uncontrollable Model Behavior

- Document quality varies

- Biases become baked into weights

- Debugging is slow and expensive

👉 With RAG, mistakes are temporary—not permanent.

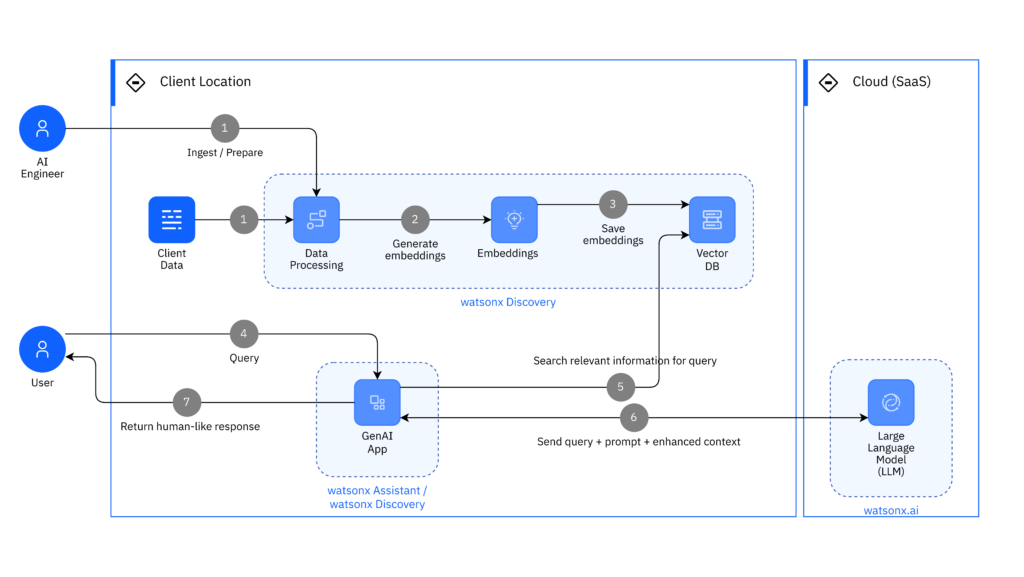

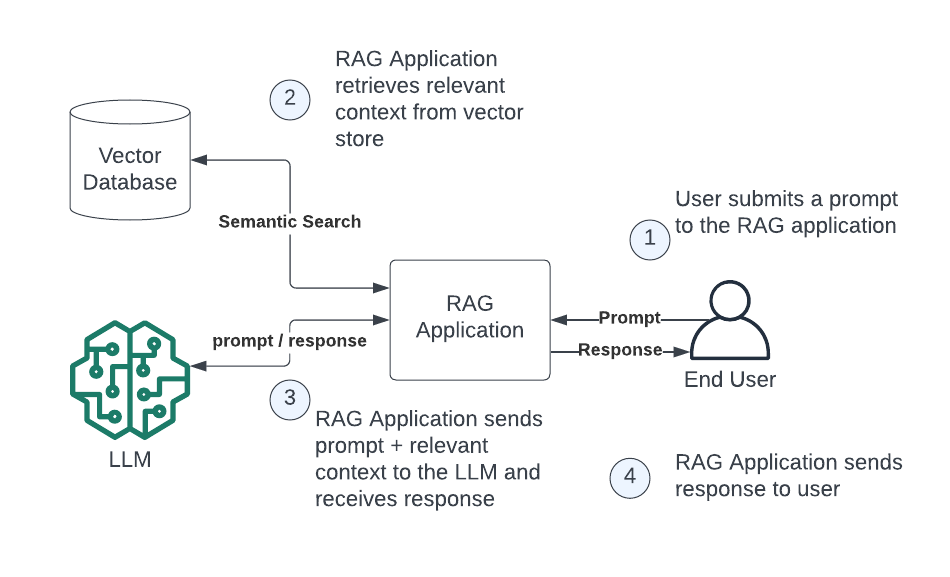

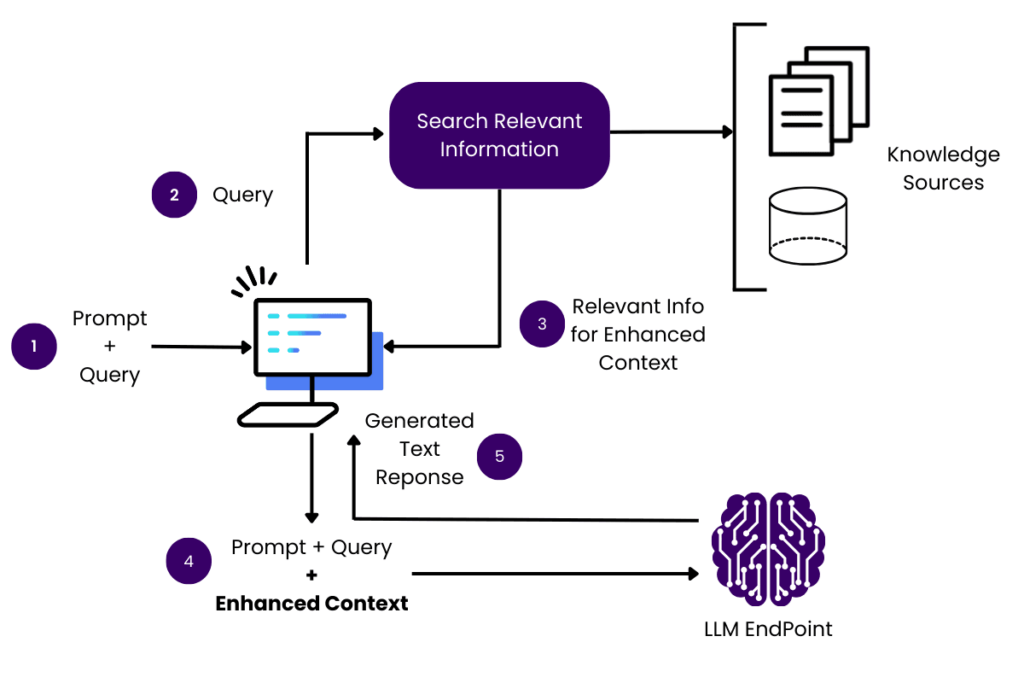

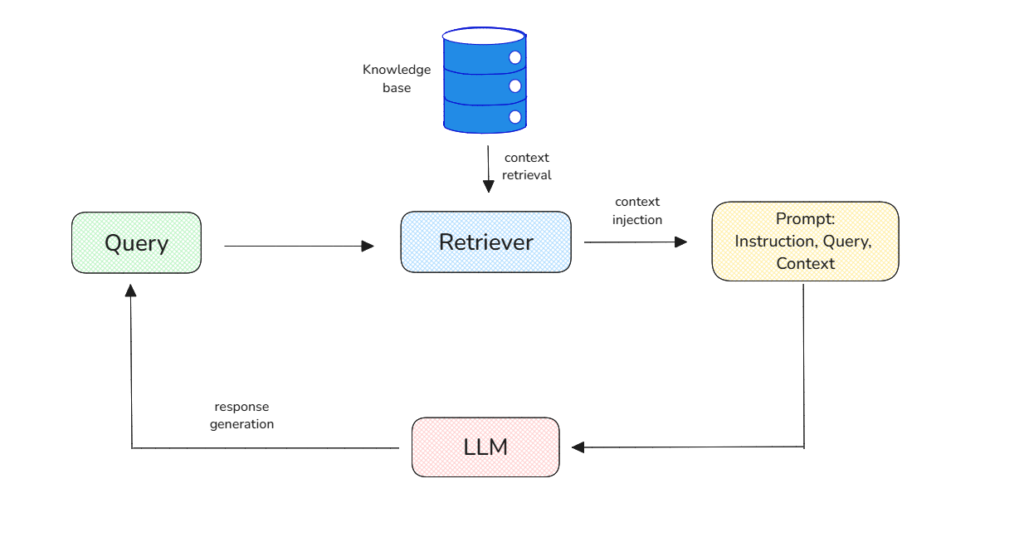

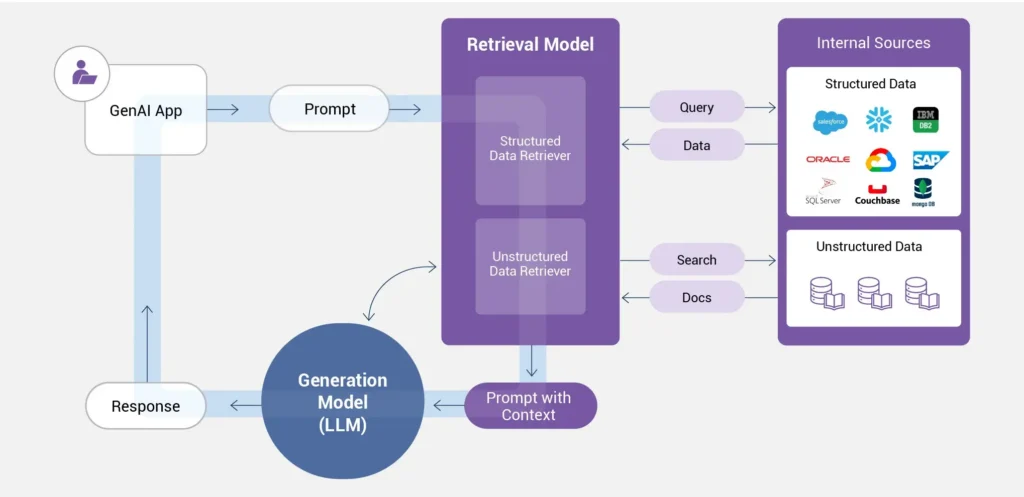

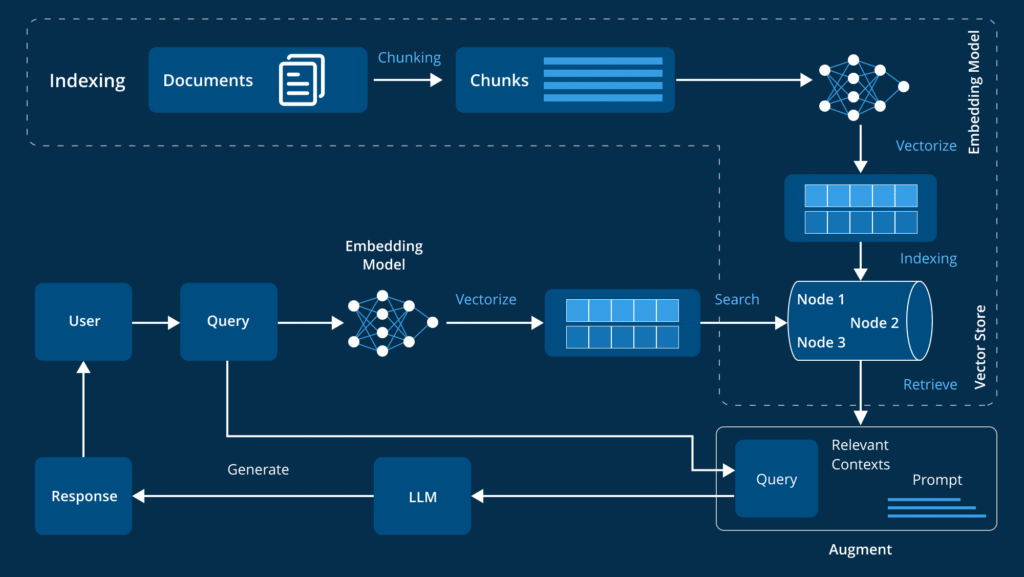

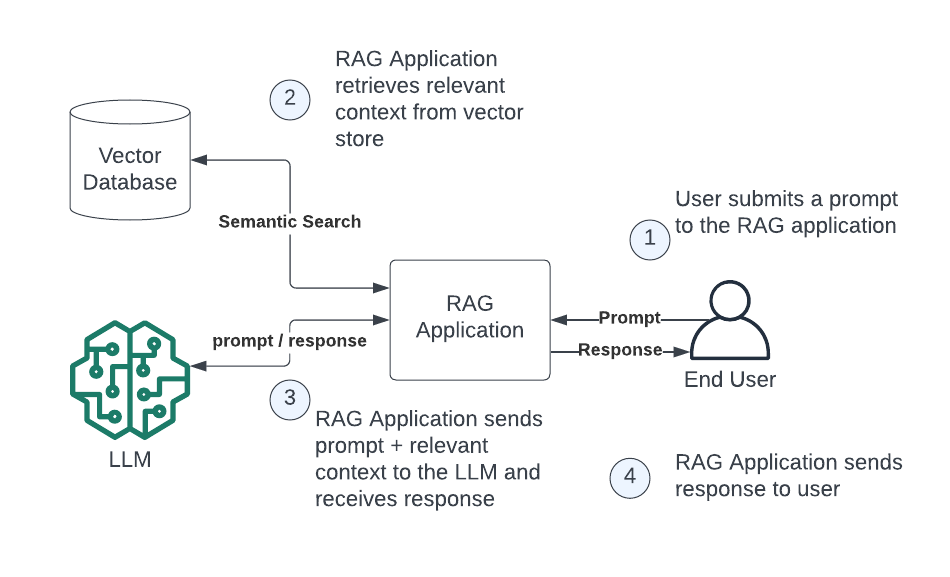

What a Correct RAG Architecture Looks Like

A production-ready RAG flow looks like this:

- User submits a question

- Query is converted to an embedding

- Vector database performs retrieval

- Top-K documents are selected

- Context is assembled

- Prompt is sent to the LLM

- Answer is returned

📌 Every step occurs during inference.
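The flow above can be sketched end to end as follows. Everything here is an assumption made for illustration: `embed` is a toy bag-of-words function instead of an embedding model, `fake_llm` is a placeholder for the actual LLM call, and the in-memory `index` stands in for a vector database. The index of document vectors is prepared ahead of queries, but building it never involves the model's weights.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: word counts with question marks stripped."""
    return Counter(text.lower().replace("?", "").split())

def similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def fake_llm(prompt: str) -> str:
    """Hypothetical placeholder for a real LLM call."""
    return f"[model reply to a {len(prompt.split())}-word prompt]"

def rag_answer(question: str, index: list[tuple[str, Counter]], k: int = 2) -> dict:
    query_vec = embed(question)                                           # query -> embedding
    scored = [(similarity(query_vec, vec), text) for text, vec in index]  # retrieval
    top_k = [text for _, text in sorted(scored, reverse=True)[:k]]        # top-K selection
    context = "\n".join(top_k)                                            # context assembly
    prompt = f"Context:\n{context}\n\nQuestion: {question}"
    return {"answer": fake_llm(prompt), "sources": top_k, "prompt": prompt}  # LLM call, answer

corpus = [
    "Returns are accepted within 30 days of purchase",
    "Shipping takes 3 to 5 business days",
    "Support is available on weekdays",
]
index = [(text, embed(text)) for text in corpus]  # refreshed whenever documents change

result = rag_answer("Within how many days are returns accepted?", index, k=1)
```

Returning the `sources` alongside the answer is a design choice worth keeping in a real system, since it lets you trace any answer back to the documents that produced it.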

What Should Be Handled by Training or Fine-Tuning?

Use this simple rule:

Suitable for training / fine-tuning:

- Writing style

- Response format

- Reasoning patterns

- Task skills (classification, summarization, extraction)

Not suitable for training:

- Company documents

- Policies and procedures

- Contracts and legal text

- ERP / SOP / operational data

👉 Skills belong in training. Knowledge belongs in RAG.

One Sentence to Remember (Very Important)

RAG answers “Where is the information?”

Training answers “How should the model behave?”

Final Conclusion

RAG belongs in the inference layer because it performs real-time data injection, not learning.

Putting RAG in the wrong layer leads to:

- Exploding costs

- Higher risk

- Poor maintainability

Putting it in the right place delivers:

- Flexibility

- Control

- Long-term scalability